AGLT2 Web > BenchMarks > Md3460Benchmark (16 Nov 2014, BenMeekhof)

ZFS and RAID-10 Benchmarks for Dell MD3460

Common Configuration notes

Tools

- Bonnie++: 1.96-8.el7 (EPEL)

- Iozone: 3.424-2.el7.rf (RPMforge)

Host system:

- Intel(R) Xeon(R) CPU E5-2660 v2 @ 2.20GHz

- Mem: 256GB

- LSI Logic / Symbios Logic SAS3008 PCI-Express Fusion-MPT SAS-3 (rev 02)

- Scientific Linux 7

Storage

- Dell MD3460, dual-active(?) RAID controllers at firmware 08.10.14.60

- 60 x ST4000NM0023 (4 TB rated, about 3.64 TiB), firmware GS0F.

Host Software

Package "device-mapper-multipath" was installed on the system, and the command "mpathconf" was run to get an initial config (it creates /etc/multipath/.multipath.conf.tmp). I then edited the file to add Dell-specific settings, following the example of an R720 system already set up at CERN. Presumably these changes are also noted in the system setup documentation. The complete multipath.conf file is attached to this page.

Even after setting this up I wasn't seeing any of the LUNs I had assigned to the system (see below for more about LUN assignment). It seemed I needed whatever setup and packages the resource DVD provides. The DVD happens to have been recently updated for RHEL7, so I mounted it and ran the setup program with the full install. It required installing a lot of X11 packages; the console install wouldn't trigger even though the docs say it should if DISPLAY is not set. After a reboot, "multipath -l" shows the two LUNs I had set up at that point. I don't know everything the installer did.

    [root@umfs11 ~]# multipath -l
    mpathb (3600a0980005de743000007ab5462d401) dm-6 DELL ,MD34xx
    size=3.6T features='3 queue_if_no_path pg_init_retries 50' hwhandler='1 rdac' wp=rw
    |-+- policy='round-robin 0' prio=0 status=active
    | `- 1:0:0:0 sdb 8:16 active undef running
    `-+- policy='round-robin 0' prio=0 status=enabled
      `- 1:0:1:0 sdd 8:48 active undef running
    mpatha (3600a0980005de743000007ae5462d408) dm-5 DELL ,MD34xx
    size=3.6T features='3 queue_if_no_path pg_init_retries 50' hwhandler='1 rdac' wp=rw
    |-+- policy='round-robin 0' prio=0 status=active
    | `- 1:0:1:1 sde 8:64 active undef running
    `-+- policy='round-robin 0' prio=0 status=enabled
      `- 1:0:0:1 sdc 8:32 active undef running
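Each LUN in that listing has one path in an "active" path group and one in an "enabled" group. The following minimal awk-based sketch lists this for each map. It assumes the `multipath -l` layout shown above, where map headers start with mpath and path lines carry a host:channel:target:lun address. The function names summarise_paths and sample_listing are hypothetical, and the sample input is the umfs11 listing from this page.

```shell
#!/bin/sh
# Summarise `multipath -l` output: one line per path with the map name, the
# status of the path group it belongs to, the sd device and the SCSI
# host:channel:target:lun address.
summarise_paths() {
    awk '
        # Map header, e.g. "mpathb (3600a...) dm-6 DELL ,MD34xx"
        /^mpath/ { map = $1; next }
        # Path-group line: remember its status (active, enabled, ...)
        /policy=/ {
            if (match($0, /status=[a-z]+/))
                group = substr($0, RSTART + 7, RLENGTH - 7)
            next
        }
        # Path line, e.g. "| `- 1:0:0:0 sdb 8:16 active undef running"
        {
            for (i = 1; i <= NF; i++)
                if ($i ~ /^[0-9]+:[0-9]+:[0-9]+:[0-9]+$/) {
                    print map, group, $(i + 1), $i
                    break
                }
        }
    '
}

# The umfs11 listing from this page (hypothetical helper name)
sample_listing() {
    cat <<'EOF'
mpathb (3600a0980005de743000007ab5462d401) dm-6 DELL ,MD34xx
size=3.6T features='3 queue_if_no_path pg_init_retries 50' hwhandler='1 rdac' wp=rw
|-+- policy='round-robin 0' prio=0 status=active
| `- 1:0:0:0 sdb 8:16 active undef running
`-+- policy='round-robin 0' prio=0 status=enabled
  `- 1:0:1:0 sdd 8:48 active undef running
mpatha (3600a0980005de743000007ae5462d408) dm-5 DELL ,MD34xx
size=3.6T features='3 queue_if_no_path pg_init_retries 50' hwhandler='1 rdac' wp=rw
|-+- policy='round-robin 0' prio=0 status=active
| `- 1:0:1:1 sde 8:64 active undef running
`-+- policy='round-robin 0' prio=0 status=enabled
  `- 1:0:0:1 sdc 8:32 active undef running
EOF
}

sample_listing | summarise_paths
# On a live system: multipath -l | summarise_paths
```

For the sample, mpathb's active path is 1:0:0:0 (sdb) and mpatha's is 1:0:1:1 (sde), so the two LUNs are active on different SCSI targets. Presumably each target is one of the two controllers. That would fit both controllers carrying I/O, but two LUNs are not enough to settle the dual-active question.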

Testing ZFS

MD3460 Array Config

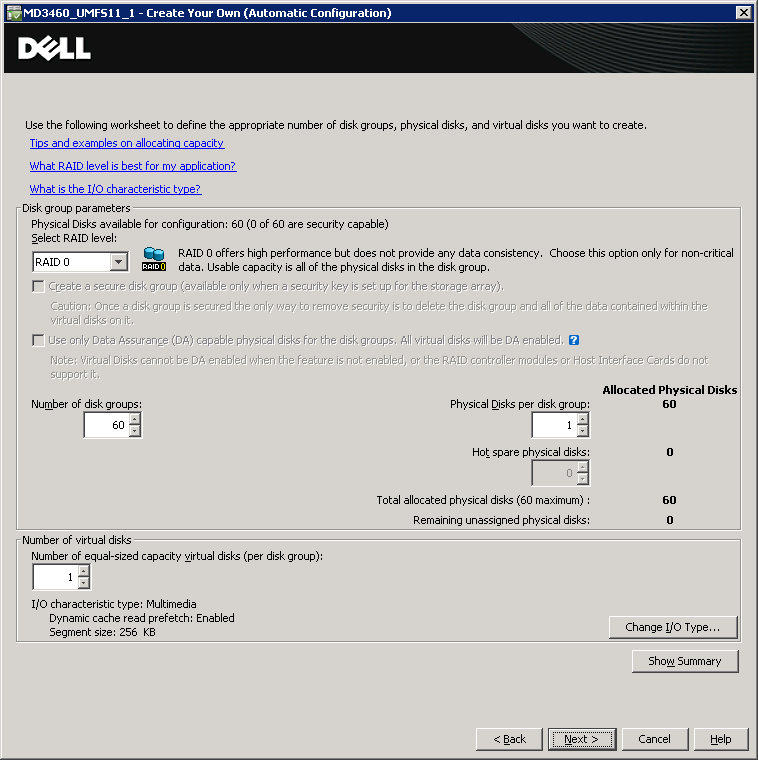

Since the MD3460 is mostly intended to be used in a RAID configuration the only way to get JBOD is to create 60 RAID-0 volumes. This is easy to do in one batch with the disk group wizard. Here's a screenshot of the config I assigned:

ZFS config

To install ZFS:

- Install/enable the EPEL and ZFS repositories

- Install packages: yum install kernel-devel zfs

- The kernel modules are built automatically by DKMS during the install

- Ran "modprobe zfs"

- On to configuring pools

The pool was created with:

    zpool create sc14 \
        mirror mpatha mpathb \
        mirror mpathc mpathd \
        ....

and so on to use all 60 disks. We end up with a pool of about 108 TiB of usable space:

    Filesystem      Size  Used Avail Use% Mounted on
    sc14            108T  128K  108T   1% /sc14

    [root@umfs11 ~]# zpool status
      pool: sc14
     state: ONLINE
      scan: none requested
    config:

            NAME          STATE     READ WRITE CKSUM
            sc14          ONLINE       0     0     0
              mirror-0    ONLINE       0     0     0
                mpatha    ONLINE       0     0     0
                mpathb    ONLINE       0     0     0
              mirror-1    ONLINE       0     0     0
                mpathc    ONLINE       0     0     0
                mpathd    ONLINE       0     0     0
              mirror-2    ONLINE       0     0     0
                mpathe    ONLINE       0     0     0
                mpathf    ONLINE       0     0     0
    .....etc....
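The mirror pairing in the zpool create command above can be generated rather than typed. This is a minimal sketch, not the attached zpool-create.sh. It assumes the 60 JBOD LUNs carry multipath user_friendly_names in sequence (mpatha to mpathz, then mpathaa, mpathab and so on) and pairs consecutive names, as the command above does. mpath_name and zpool_mirror_args are hypothetical helpers, and the script only prints the command.

```shell
#!/bin/sh
# Build the "mirror A B mirror C D ..." argument list for zpool create.
# Assumption: the LUNs are named mpatha..mpathz, mpathaa..mpathaz, mpathba, ...

# mpath_name N -> name of the Nth device in that sequence (1 -> mpatha)
mpath_name() {
    n=$1
    suffix=""
    while [ "$n" -gt 0 ]; do
        n=$((n - 1))
        # take letter number (n mod 26) + 1 out of a..z
        letter=$(printf '%s' abcdefghijklmnopqrstuvwxyz | cut -c $((n % 26 + 1)))
        suffix="$letter$suffix"
        n=$((n / 26))
    done
    printf 'mpath%s\n' "$suffix"
}

# zpool_mirror_args COUNT -> pairs devices 1+2, 3+4, ... (COUNT should be even)
zpool_mirror_args() {
    total=$1
    i=1
    args=""
    while [ "$i" -lt "$total" ]; do
        args="$args mirror $(mpath_name "$i") $(mpath_name $((i + 1)))"
        i=$((i + 2))
    done
    printf '%s\n' "${args# }"
}

# Print the command for review instead of running it
echo zpool create sc14 $(zpool_mirror_args 60)
```

zpool_mirror_args 60 ends with mirror mpathbg mpathbh. The next name in the sequence, mpathbi, is the device that the hardware RAID-10 volume appears as later on this page. That fits names being handed out in order. On another system, the actual names depend on the order in which multipath first saw the LUNs.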

RAID10 Config

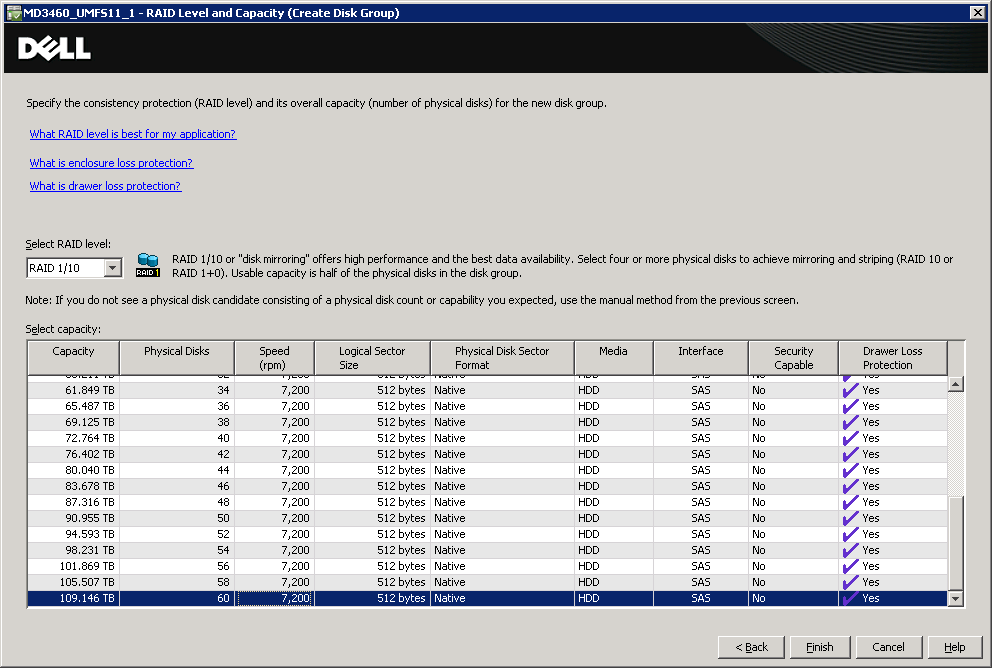

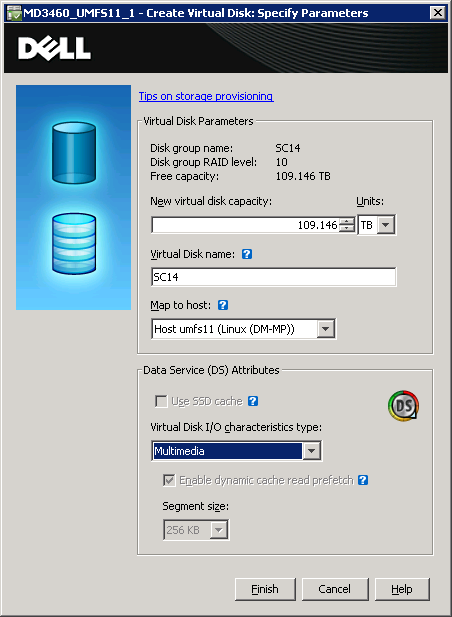

I chose to use the automatic provisioning presets when creating the RAID10 disk group. As it happens this generates a config consisting of 30 mirrored pairs (60 disks) which is exactly what we did with ZFS. Screenshots:

Then on umfs11 I made an XFS filesystem using defaults:

    mkfs.xfs -L SC14 /dev/mapper/mpathbi

Mounted at /sc14:

    Filesystem           Size  Used Avail Use% Mounted on
    /dev/mapper/mpathbi  110T   36M  110T   1% /sc14
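Both df figures can be checked against the drive count. This minimal sketch uses awk arithmetic. It assumes each drive contributes its rated 4 × 10^12 bytes and ignores array metadata, filesystem overhead and any ZFS reservations. drive_tib and mirror_pool_tib are hypothetical helper names.

```shell
#!/bin/sh
# Expected capacity of two-way mirrors, in the binary units df -h prints.
# Assumption: each drive contributes its full rated size in decimal bytes.

# drive_tib BYTES -> drive size in TiB (2^40 bytes), two decimals
drive_tib() {
    awk -v b="$1" 'BEGIN { printf "%.2f\n", b / 2 ^ 40 }'
}

# mirror_pool_tib BYTES MIRRORS -> usable TiB of MIRRORS two-way mirrors
# (each mirror stores one drive's worth of data), one decimal
mirror_pool_tib() {
    awk -v b="$1" -v m="$2" 'BEGIN { printf "%.1f\n", m * b / 2 ^ 40 }'
}

drive_tib 4e12            # ST4000NM0023, rated 4 TB
mirror_pool_tib 4e12 30   # 60 drives as 30 mirrors, as in both configurations
```

This prints 3.64 (TiB per drive) and 109.1 (TiB for 30 two-way mirrors). GNU df -h rounds sizes up, which is consistent with XFS showing 110T. The 108T reported for the ZFS pool is presumably the same raw space minus what ZFS sets aside for itself.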

Bonnie tests

Command used:

    bonnie++ -d /sc14 -s 515480:1024k -q -u root > bonnie-test1.out

ZFS

bonnie-test1.out is attached. Below are its results (bonnie++ 1.96, converted with bon_csv2html) for a 515480M test file with 1m chunks. Num Files was 16, i.e. 16 × 1024 files in the create tests. Sequential I/O rates are in K/sec; seeks and file operations are per second. +++++ means the operation finished too quickly for bonnie++ to report a meaningful figure.

| Test | Rate | % CPU | Latency |
|---|---|---|---|
| Sequential output, per char | 161 K/sec | 99 | 6377ms |
| Sequential output, block | 867205 K/sec | 30 | 4111us |
| Sequential output, rewrite | 660077 K/sec | 32 | 427ms |
| Sequential input, per char | 390 K/sec | 99 | 2767ms |
| Sequential input, block | 1674493 K/sec | 45 | 365ms |
| Random seeks | 349.2 /sec | 68 | 92365us |
| Sequential create, create | 21122 /sec | 96 | 31437us |
| Sequential create, read | +++++ | +++ | 308us |
| Sequential create, delete | 25714 /sec | 99 | 342us |
| Random create, create | 18824 /sec | 97 | 64313us |
| Random create, read | +++++ | +++ | 17us |
| Random create, delete | 25616 /sec | 99 | 85us |

Hardware RAID-10

Generated from the attached bonnie-test-r10.out with bon_csv2html (bonnie++ 1.96; size 515480M, chunk size 1m, Num Files 16):

| Test | Rate | % CPU | Latency |
|---|---|---|---|
| Sequential output, per char | 1823 K/sec | 97 | 566ms |
| Sequential output, block | 1118965 K/sec | 72 | 32961us |
| Sequential output, rewrite | 452317 K/sec | 36 | 1984ms |
| Sequential input, per char | 3334 K/sec | 98 | 343ms |
| Sequential input, block | 1006001 K/sec | 34 | 362ms |
| Random seeks | 130.2 /sec | 69 | 256ms |
| Sequential create, create | 11433 /sec | 18 | 7894us |
| Sequential create, read | +++++ | +++ | 124us |
| Sequential create, delete | 18154 /sec | 26 | 111us |
| Random create, create | 11768 /sec | 18 | 394us |
| Random create, read | +++++ | +++ | 43us |
| Random create, delete | 18610 /sec | 26 | 72us |
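For a side-by-side view of the two bonnie++ tables, the following minimal sketch takes the block write, rewrite, block read and random seek figures copied from them. It prints each pair with a ZFS/RAID-10 ratio. It assumes bonnie++'s K/sec means KiB/s and converts those rows to MiB/s. compare_bonnie, bonnie_results and the metric labels are made up for this example.

```shell
#!/bin/sh
# Side-by-side view of the main bonnie++ figures from the two tables above.
# Input columns: metric, unit, ZFS value, hardware RAID-10 value.
compare_bonnie() {
    awk '{
        zfs = $3
        r10 = $4
        unit = $2
        if (unit == "K/sec") {
            # assumption: bonnie++ K is 1024 bytes, so divide by 1024 for MiB/s
            zfs = zfs / 1024
            r10 = r10 / 1024
            unit = "MiB/s"
        }
        printf "%s %.0f %.0f %s %.2f\n", $1, zfs, r10, unit, zfs / r10
    }'
}

# Values copied from the ZFS and RAID-10 tables (labels are ad hoc)
bonnie_results() {
    cat <<'EOF'
block_write K/sec 867205 1118965
rewrite K/sec 660077 452317
block_read K/sec 1674493 1006001
random_seeks /sec 349.2 130.2
EOF
}

bonnie_results | compare_bonnie
```

With these inputs it prints block_write 847 vs 1093 MiB/s (ratio 0.78), rewrite 645 vs 442 (1.46), block_read 1635 vs 982 (1.66) and random_seeks 349 vs 130 per second (2.68). Among these four figures, hardware RAID-10 leads only on block writes.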

Iozone tests

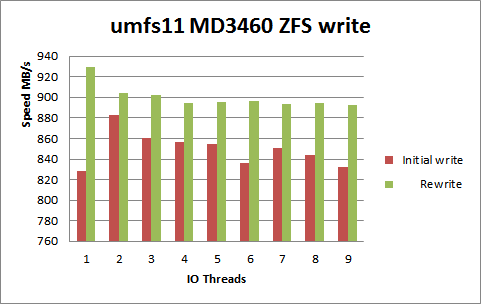

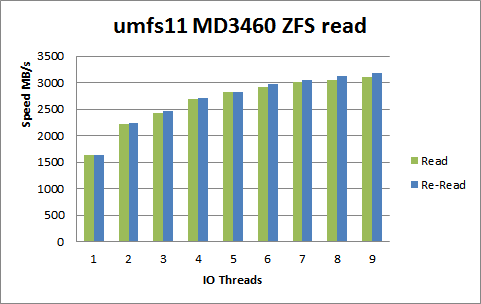

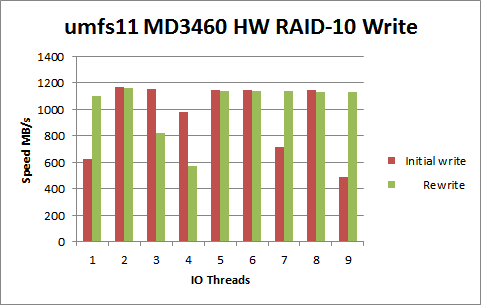

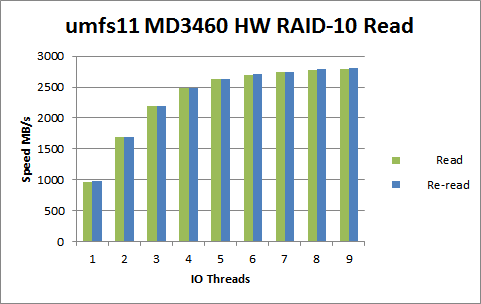

Spreadsheets with the data points used to generate these graphs are attached, along with the iozone output. I had to stop at 9 threads due to time constraints. Command used:

    iozone -Rb iozone-umfs11-zfs.xls -r512k -s512G -i0 -i1 -l1 -u12 -P1 \
        -F /sc14/ioz1 /sc14/ioz2 /sc14/ioz3 /sc14/ioz4 /sc14/ioz5 /sc14/ioz6 \
           /sc14/ioz7 /sc14/ioz8 /sc14/ioz9 /sc14/ioz10 /sc14/ioz11 /sc14/ioz12

ZFS
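iozone's -F option takes one file name per process, so with -u12 the iozone command above lists twelve paths. The small sketch below builds that list instead of typing it out and adds the arithmetic behind the time constraint. iozone_files and iozone_footprint_gib are hypothetical helpers. The footprint assumes that in throughput mode (-l/-u) the -s512G size applies to each process's own file.

```shell
#!/bin/sh
# iozone_files DIR COUNT -> "DIR/ioz1 DIR/ioz2 ... DIR/iozCOUNT"
# (hypothetical helper: one file name per process for iozone -F)
iozone_files() {
    dir=$1
    count=$2
    i=1
    list=""
    while [ "$i" -le "$count" ]; do
        list="$list $dir/ioz$i"
        i=$((i + 1))
    done
    printf '%s\n' "${list# }"
}

# iozone_footprint_gib PROCS SIZE_GIB -> total GiB of test files in one run,
# assuming each process gets its own file of the -s size
iozone_footprint_gib() {
    printf '%s\n' "$(($1 * $2))"
}

# Print (rather than run) the command with the -u12 upper limit used here
echo iozone -Rb iozone-umfs11-zfs.xls -r512k -s512G -i0 -i1 -l1 -u12 -P1 \
    -F $(iozone_files /sc14 12)
echo "files at 9 processes: $(iozone_footprint_gib 9 512) GiB"
```

At 9 processes that is 4608 GiB (4.5 TiB) of files in a single run, each written, rewritten, read and re-read by -i0 and -i1.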

Hardware RAID-10

-- BenMeekhof - 11 Nov 2014


| Attachment | Size | Date | Who | Comment |
|---|---|---|---|---|
| bonnie-test-r10.out | 245 bytes | 16 Nov 2014 - 22:31 | BenMeekhof | Hardware RAID-10 Bonnie++ output |
| bonnie-test1.out | 247 bytes | 12 Nov 2014 - 20:00 | BenMeekhof | |
| iozone-r10.out | 15 K | 16 Nov 2014 - 22:32 | BenMeekhof | Hardware RAID-10 iozone text output |
| iozone-umfs11-r10.xlsx | 13 K | 16 Nov 2014 - 23:01 | BenMeekhof | |
| iozone-umfs11-zfs.xlsx | 12 K | 13 Nov 2014 - 21:42 | BenMeekhof | |
| jbod-config.png | 37 K | 11 Nov 2014 - 19:43 | BenMeekhof | |
| multipath.conf | 5 K | 11 Nov 2014 - 21:42 | BenMeekhof | |
| r10-read.png | 13 K | 16 Nov 2014 - 23:02 | BenMeekhof | |
| r10-write.png | 13 K | 16 Nov 2014 - 23:02 | BenMeekhof | |
| raid10-config.png | 46 K | 13 Nov 2014 - 22:09 | BenMeekhof | |
| raid10-vd-config.png | 35 K | 13 Nov 2014 - 22:09 | BenMeekhof | |
| umfs11-iozone.out | 13 K | 13 Nov 2014 - 21:43 | BenMeekhof | ZFS Iozone test output |
| zfs-read.png | 12 K | 13 Nov 2014 - 21:40 | BenMeekhof | |
| zfs-write.png | 12 K | 13 Nov 2014 - 21:40 | BenMeekhof | |
| zpool-create.sh | 756 bytes | 12 Nov 2014 - 19:03 | BenMeekhof | |


Topic revision: r6 - 16 Nov 2014, BenMeekhof

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.
