The COVID-19 High Performance Computing Consortium

AGLT2 is joining the fight by allocating up to 50% of its job slots to COVID-19 research as part of the Open Science Grid (OSG) effort.

AGLT2 provides more than 2,500 multi-core job slots (12,000 cores) and over 7 petabytes of storage for ATLAS physics computing. Site infrastructure services for job management, storage management, and interfacing with the ATLAS computing cloud are managed at the University of Michigan (UM), while computing and storage resources are located at both UM and Michigan State University (MSU).

To outside users, our site appears as one entity. The collaboration between UM and MSU allows our site to provide twice the resources that would otherwise be possible and to increase our redundancy in the event that either location is unavailable.

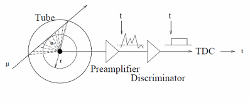

To determine calibration compensations for the ATLAS Monitored Drift Tubes (MDT), a special data stream is sent to calibration centers in Michigan, Rome, and Munich.

Storage, CPU, and human resources at AGLT2 are dedicated to MDT calibration, including dedicated hosts to process calibration data, a database replicated to CERN, and custom AGLT2-authored tools. The ATLAS calibration process runs every day that there is beam in the LHC.

OSiRIS or MI-OSiRIS (Multi-Institutional Open Storage Research Infrastructure) will combine a number of innovative concepts to provide a distributed, multi-institutional storage infrastructure that will allow researchers at any of our three campuses to read, write, manage and share their data directly from their computing facility locations.

Our goal is to provide transparent, high-performance access to the same storage infrastructure from well-connected locations on any of our campuses. We intend to enable this through a combination of network discovery, monitoring, and management tools, and through the creative use of Ceph features.

By providing a single data infrastructure that supports computational access to the data "in place", we can meet many of the data-intensive and collaboration challenges faced by our research communities and enable these communities to easily undertake research collaborations beyond the borders of their own universities.

OSiRIS is a collaboration between the University of Michigan, Wayne State University, Michigan State University, and Indiana University, funded by the NSF under award number 1541335. Many other institutions will collaborate as scientific users as the project becomes established.

PuNDIT (Pythia Network Diagnosis Infrastructure)

PuNDIT will integrate and enhance several software tools needed by the High Energy Physics (HEP) community to provide an infrastructure for identifying, diagnosing, and localizing network problems. The core of PuNDIT is the Pythia tool, which uses perfSONAR data to detect, identify, and locate network performance problems. The Pythia algorithms, originally based on one-way latency and packet loss, will be re-implemented to incorporate the lessons learned from the first release and augmented with additional metrics from perfSONAR throughput and traceroute measurements. The PuNDIT infrastructure will build upon other popular open-source tools, including SmokePing, ESnet's Monitoring and Debugging Dashboard (MaDDash), and the Open Monitoring Distribution (OMD), to provide a user-friendly network diagnosis framework.
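To make the idea of detecting problems from one-way latency and packet loss more concrete, here is a minimal Python sketch. It is not Pythia's actual algorithm: the function name `diagnose_path`, the symptom labels, and both thresholds are hypothetical choices made for illustration, and the input lists stand in for measurements that would in practice come from perfSONAR.

```python
from statistics import median


def diagnose_path(delays_ms, losses, delay_factor=2.0, loss_threshold=0.01):
    """Return a list of symptom labels for one monitored network path.

    delays_ms: one-way delay samples in milliseconds.
    losses: packet-loss fraction (0.0 to 1.0) per measurement interval.
    delay_factor, loss_threshold: illustrative thresholds (assumptions).
    """
    symptoms = []

    # Treat the smallest observed delay as an estimate of the
    # uncongested baseline for this path.
    baseline = min(delays_ms)
    typical = median(delays_ms)
    if typical > delay_factor * baseline:
        symptoms.append("sustained-delay-increase")

    # Flag the path if the average loss fraction exceeds the threshold.
    if sum(losses) / len(losses) > loss_threshold:
        symptoms.append("packet-loss")

    return symptoms


healthy = diagnose_path([10.1, 10.3, 10.2, 10.4], [0.0, 0.0, 0.0, 0.0])
congested = diagnose_path([10.1, 25.0, 31.2, 28.7], [0.0, 0.02, 0.05, 0.03])
print(healthy)
print(congested)
```

A real diagnosis framework would go further, for example by correlating symptoms across many paths to localize the faulty segment, which is where traceroute data becomes useful.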

The PuNDIT research project is a collaboration between Georgia Tech and the University of Michigan, funded by the National Science Foundation under award numbers 1440571 and 1440585.

SDN Optimized High-Performance Data Transfer Systems for Exascale Science

This demonstration focuses on network path-building and flow optimizations using SDN and intelligent traffic engineering techniques.

LHCONE Point2point Service with Data Transfer Nodes

A network services model that matches the requirements of LHC high-energy physics research with emerging capabilities for programmable networking.