
Evaluation and testing of Nexsan SATABeast with B60E expansion

Unpacking and Installation

See photos and some comments here: https://picasaweb.google.com/ben.meekhof/NexSanEvaluation

Initial setup - host system setup

Host system is umfs03.

- Kernel 2.6.38-UL1

- 2 x Qlogic QLE2562 (dual ports, 8Gb/s)

- 2 x 6 core Intel(R) Xeon(R) CPU X5670 @ 2.93GHz with HT enabled

- 64GB 1333MHz RAM

Multipathing setup

See the Nexsan multipathing configuration documentation for the actual config used.

- prio_callout directive changed to "prio alua" in our version of multipath

- aliases set in multipath.conf in order of lowest to highest LUN (mpatha-mpathh)

The Nexsan SATABeast and B60E are connected to our Dell headnode via 2 QLogic ISP2532 8Gb FC HBAs. Each HBA has 2 channels (to attach to the 2 different controllers on the SATABeast). We are running the Nexsan with all LUNs available on all paths, which means the host system sees 4 different paths to each storage location. We need to configure UMFS03 with Linux multipathing to manage these paths. There are a number of relevant links below as references. Since we are running a kernel > 2.6.32, we need to update our device-mapper-multipath RPM to something newer than the 0.4.7 that is available for SL5.x. I found device-mapper-multipath-0.4.9-23.0.8.el5.src.rpm at http://oss.oracle.com/el5/SRPMS-updates/ and it rebuilds fine on UMFS03.

I installed the resulting 3 RPMs (device-mapper-multipath-0.4.9-23.0.8.el5.x86_64.rpm, device-mapper-multipath-libs-0.4.9-23.0.8.el5.x86_64.rpm and kpartx-0.4.9-23.0.8.el5.x86_64.rpm) on UMFS03 via rpm -Uvh.
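The rebuild and install steps can be sketched roughly as below. This is a dry run that only prints the commands. The package names are taken from the text, but the RPM_DIR output directory is an assumption (the rpmbuild default on an EL5-era system) and the function name is purely illustrative.

```shell
#!/bin/sh
# Dry-run sketch: prints the rebuild/install commands rather than running them.
# Package names come from the text; RPM_DIR is an assumed rpmbuild output dir.

VERSION="0.4.9-23.0.8.el5"
SRPM="device-mapper-multipath-${VERSION}.src.rpm"
RPM_DIR="${RPM_DIR:-/usr/src/redhat/RPMS/x86_64}"

print_rebuild_steps() {
    # Step 1: rebuild binary RPMs from the source RPM.
    echo "rpmbuild --rebuild ${SRPM}"
    # Step 2: install/upgrade the three resulting packages in one transaction.
    pkgs=""
    for name in device-mapper-multipath device-mapper-multipath-libs kpartx; do
        pkgs="${pkgs} ${RPM_DIR}/${name}-${VERSION}.x86_64.rpm"
    done
    echo "rpm -Uvh${pkgs}"
}

print_rebuild_steps
```

Installing all three packages in a single rpm -Uvh call lets rpm resolve the dependencies between them in one transaction.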

This link has a quick overview on setting up multipathing on Linux: http://jang.blogs.ilrt.org/2009/03/27/braindump-of-fc-multipathing-on-linux/. There are some older relevant links at http://www.areasys.com/pdf/dataon/dns1x00/DM0002.pdf and http://www.dell.com/downloads/global/power/ps3q06-20060189-Michael.pdf

The first thing to do was to edit /etc/multipath.conf and add a blacklist_exceptions section.

Because /dev/sda is in use, we leave it blacklisted but allow any /dev/sd[b-z] devices (which will be the Nexsan LUNs). (NOTE: this was overridden by the new find_multipaths option.)
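The exact section is not reproduced on this page; the attached multipath.conf holds the configuration actually used. As a rough illustration only, such a setup might look like the fragment below. The regular expressions, the catch-all blacklist and the defaults entry are all assumptions, not copied from the tested config.

```
# Hypothetical fragment of /etc/multipath.conf, not the tested configuration.
blacklist {
    # Assumed: blacklist every sd device, which includes the local disk sda.
    devnode "^sd[a-z]"
}
blacklist_exceptions {
    # Allow sdb..sdz again; these are the paths to the Nexsan LUNs.
    devnode "^sd[b-z]"
}
defaults {
    # Assumed setting: only build multipath devices for LUNs seen on more than one path.
    find_multipaths yes
}
```

With find_multipaths enabled, a single-path local disk such as sda is skipped anyway, which would explain why the exception list was no longer the deciding factor.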

Once the unit had configured the LUNs (see below) we were able to see the multipath setup via multipath -v2 -d.

Next we ran chkconfig multipathd on so that the daemon starts on reboot.

I list the details on configuring /etc/multipath.conf in configuring multipath.conf.

Once multipath was set up, we had one /dev/dm-n device per configured LUN (8 in our case). I then used a simple script to create 8 mount points and format the LUNs with XFS. Doing mount -a -t xfs then resulted in these mounts:

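| Filesystem | Size | Used | Avail | Use% | Mounted on |
|---|---|---|---|---|---|
| /dev/mapper/mpatha | 22T | 34M | 22T | 1% | /dcache |
| /dev/mapper/mpathb | 22T | 34M | 22T | 1% | /dcache1 |
| /dev/mapper/mpathc | 22T | 34M | 22T | 1% | /dcache2 |
| /dev/mapper/mpathd | 19T | 129G | 19T | 1% | /dcache3 |
| /dev/mapper/mpathe | 19T | 34M | 19T | 1% | /dcache4 |
| /dev/mapper/mpathf | 19T | 34M | 19T | 1% | /dcache5 |
| /dev/mapper/mpathg | 19T | 34M | 19T | 1% | /dcache6 |
| /dev/mapper/mpathh | 19T | 34M | 19T | 1% | /dcache7 |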
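The format-and-mount step can be sketched as follows. This is not the attached format_Nexsan.sh, whose contents are not shown here. It is a hypothetical dry run that prints the commands for the 8 LUNs, and the fstab options (defaults 0 0) and the -f flag are assumptions.

```shell
#!/bin/sh
# Dry-run sketch (hypothetical; not the attached format_Nexsan.sh).
# Prints the commands for each LUN instead of executing them, because
# mkfs.xfs destroys any data on the target device.

print_format_steps() {
    i=0
    for letter in a b c d e f g h; do
        dev="/dev/mapper/mpath${letter}"
        # The first mount point is /dcache, the rest are /dcache1 .. /dcache7.
        if [ "$i" -eq 0 ]; then
            mnt="/dcache"
        else
            mnt="/dcache${i}"
        fi
        echo "mkdir -p ${mnt}"
        echo "mkfs.xfs -f ${dev}"
        # fstab entry so that 'mount -a -t xfs' picks the filesystem up.
        echo "echo '${dev} ${mnt} xfs defaults 0 0' >> /etc/fstab"
        i=$((i + 1))
    done
    echo "mount -a -t xfs"
}

print_format_steps
```

Piping the output through sh as root would execute the steps, but review the printed device names against multipath -ll first.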

Changes for test series 2 (Nexsan recommended)

Filesystems were re-created with explicit sunit,swidth:

For the 14-disk RAID6 volumes (12 data disks) on the SATABeast main chassis:

mkfs.xfs -f -L umfs03_3 -d sunit=256,swidth=3072 /dev/mapper/mpathc

For the 12-disk RAID6 volumes (10 data disks) on the B60E:

mkfs.xfs -f -L umfs03_4 -d sunit=256,swidth=2560 /dev/mapper/mpathd

Mounts as above.
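The sunit and swidth values above can be reproduced with a little arithmetic. mkfs.xfs takes both in 512-byte sectors, so sunit=256 corresponds to a 128 KiB stripe unit. That chunk size is inferred from the numbers rather than stated by Nexsan here. Dividing swidth by sunit gives 12 and 10 data disks, which matches the 14-disk and 12-disk RAID6 volumes listed in the Nexsan setup section. The helper name below is illustrative.

```shell
#!/bin/sh
# xfs_stripe_opts CHUNK_KIB DATA_DISKS
# Prints the -d argument for mkfs.xfs. sunit and swidth are expressed in
# 512-byte sectors, so a chunk of N KiB is N * 1024 / 512 sectors.
xfs_stripe_opts() {
    chunk_kib=$1
    data_disks=$2
    sunit=$((chunk_kib * 1024 / 512))
    swidth=$((sunit * data_disks))
    echo "sunit=${sunit},swidth=${swidth}"
}

# RAID6 uses two disks' worth of parity, so data disks = total disks - 2.
# The 128 KiB chunk size is an assumption inferred from sunit=256.
xfs_stripe_opts 128 $((14 - 2))   # prints sunit=256,swidth=3072
xfs_stripe_opts 128 $((12 - 2))   # prints sunit=256,swidth=2560
```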

Initial setup - Nexsan system

- Management: https://192.41.230.194 or https://10.10.1.194

- Disk Model: Hitachi HUA722020ALA330, Capacity: 2000399 MB, Firmware: JKAOA3EA, Type: SATA

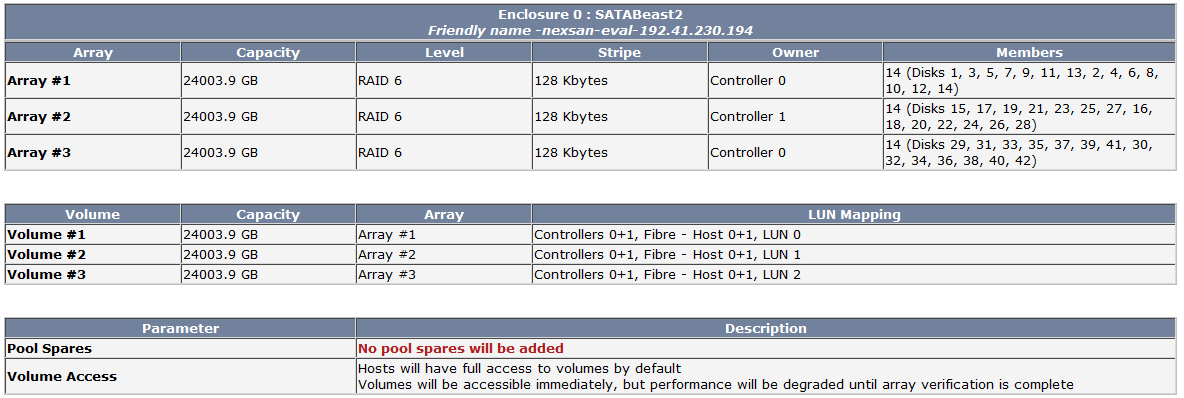

- SATABeast - 3 x 14 disk RAID6 (21.8 TiB/volume, total 65.4 TiB - metric 24TB/volume, total 72TB)

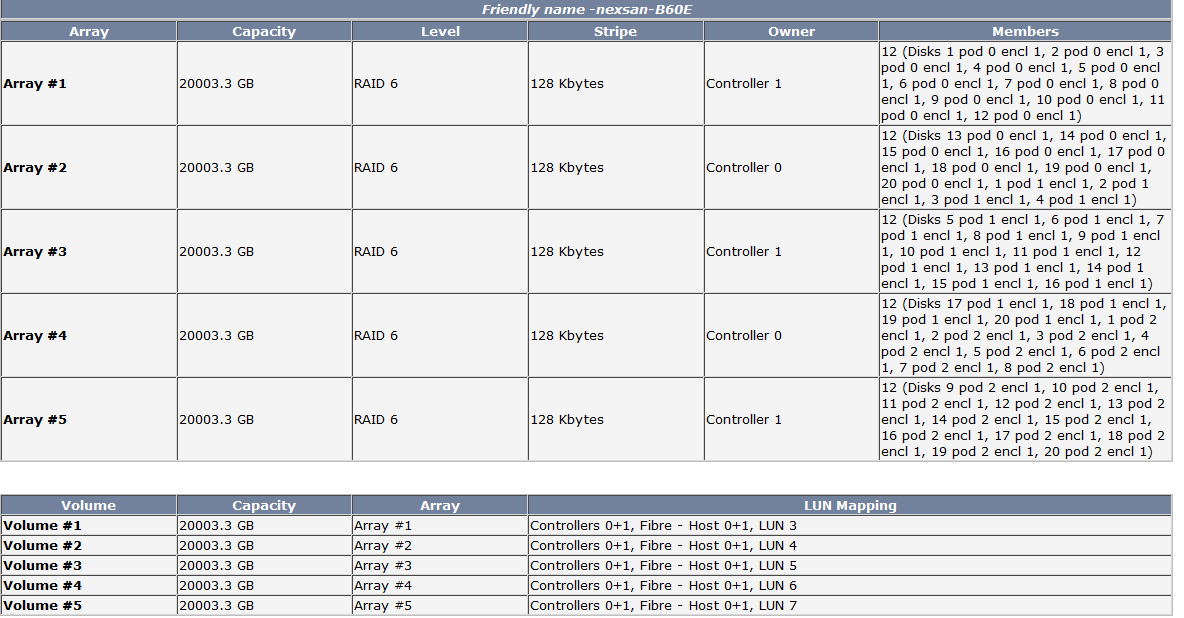

- B60 - 5 x 12 disk RAID6 (18.1 TiB/volume, total 90.5 TiB - metric 20TB/volume, total 100TB)

- AutoMAID disabled

- APAL Enclosure mode

- Write cache enabled, FUA off, 1983MB per controller (mirrored)

- Cache optimization setting: Mixed sequential/random

- Cache streaming mode off

- Host fiber access mode: Point to Point

- Initial array building step (simultaneous for all) started 4-30-2011 12:30pm, finished XXXX

Dual controllers can be set up in non-redundant mode or, using multipath, in All Ports All LUNs (APAL) mode. We plan to test with APAL mode enabled.

Screenshots

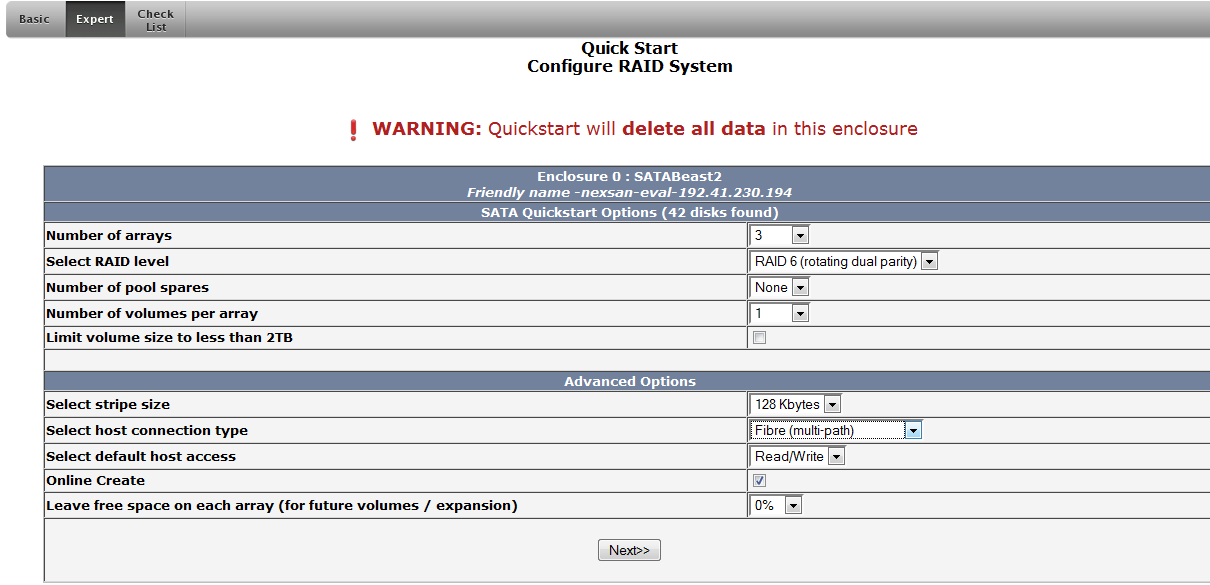

- Screenshot of quickstart setup:

- Configuration created:

- B60 expansion configuration:

Test Results

- According to Nexsan, the standalone version of the B60 with controllers built in can achieve higher performance numbers than the SATABeast we evaluated:

"For the E60 w/ E60X SATA RAID5 sequential writes of more than 950MB/s or more than 1400MB/s (This is the difference of cache mirroring enabled/disabled) and sequential reads of more than 2500MB/s"

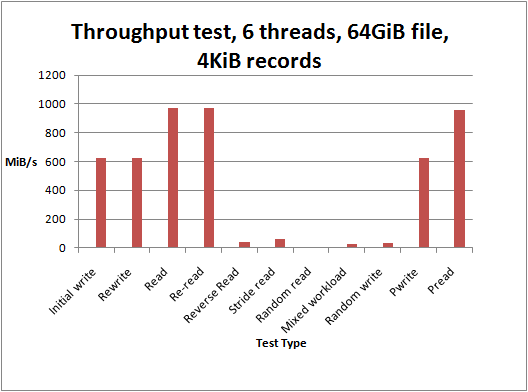

Throughput with all tests, 64GiB file, 4KiB records, 6 threads: throughput_test4_alltests_6threads.xls, throughput_test4_alltests_6threads.out

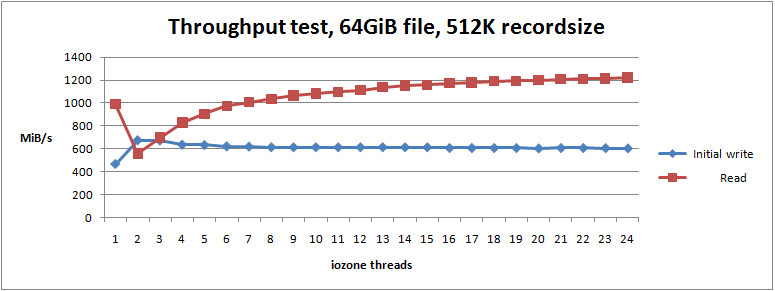

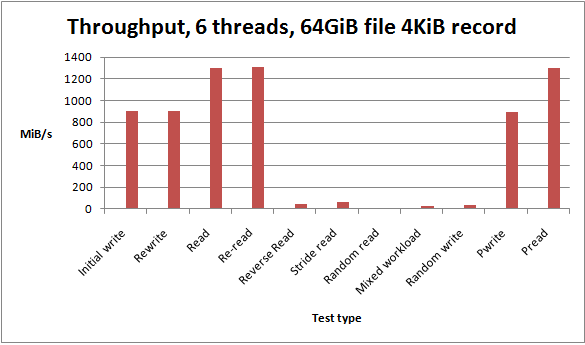

Test Results - series 2

- Changes made from series 1:

- Write cache changed to non-mirrored mode

- Cache optimization setting: Sequential

- sunit,swidth on XFS as noted previously

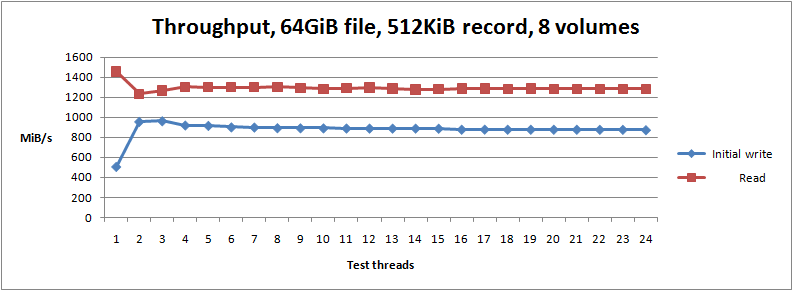

Cache streaming mode enabled for next test

Throughput test, 64GiB file, 512KiB record, up to 24 threads, series2: throughput_test6_reconfig.xls, throughput_test6_reconfig.out
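The exact iozone command lines are not recorded on this page. A throughput run with these parameters might be invoked roughly as sketched below. Only the file size, record size and thread limit come from the text. The test selection (-i 0 -i 1), the use of -l/-u to sweep the process count, whether 64GiB applies per process, and the output name are all assumptions.

```shell
#!/bin/sh
# Dry-run sketch: builds an iozone throughput command line and prints it.
# iozone_cmd LOWER UPPER SIZE RECORD OUT
iozone_cmd() {
    lower=$1    # lowest number of processes to test
    upper=$2    # highest number of processes to test
    size=$3     # file size, e.g. 64g
    record=$4   # record size, e.g. 512k
    out=$5      # basename for the Excel-format report
    # -i 0 = write/rewrite, -i 1 = read/reread (assumed test selection);
    # -R -b writes an Excel-compatible report file.
    echo "iozone -i 0 -i 1 -l ${lower} -u ${upper} -s ${size} -r ${record} -R -b ${out}.xls"
}

# Hypothetical invocation for the series 2 parameters.
iozone_cmd 1 24 64g 512k throughput_sketch
```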

Scripts/files used

- format_Nexsan.sh: Bash script to format Nexsan LUNs and create /dcacheN mount points

- fss-setup-filesystems-Nexsan-rec.sh: xfs format for series 2

- multipath.conf: multipathing setup used for tests

| Attachment | Size | Date | Who | Comment |
|---|---|---|---|---|
| Nexsan_Linux_Multipathing-V1.pdf | 770 K | 27 May 2011 - 13:29 | BenMeekhof | Nexsan multipathing directions |
| fss-setup-filesystems-Nexsan-rec.sh | 592 bytes | 17 May 2011 - 13:49 | BenMeekhof | xfs format for series 2 |
| multipath.conf | 4 K | 17 May 2011 - 19:52 | BenMeekhof | multipathing setup used for tests |
| nexsan-b60-setup.png | 52 K | 30 Apr 2011 - 15:47 | BenMeekhof | B60 expansion configuration |
| nexsan-sb-quickstart.png | 37 K | 30 Apr 2011 - 15:46 | BenMeekhof | Screenshot of quickstart setup |
| nexsan-sb-setup.png | 33 K | 30 Apr 2011 - 15:46 | BenMeekhof | Configuration created |
| sample_test.out | 19 K | 01 May 2011 - 19:57 | BenMeekhof | iozone output of test |
| sample_test.xlsx | 3 K | 01 May 2011 - 19:48 | BenMeekhof | Quick test of throughput with 1GB file (raid init ongoing). |
| test_throughput_1GB.png | 20 K | 01 May 2011 - 19:34 | BenMeekhof | Graph of 1GB throughput test |
| throughput_test3.out | 19 K | 06 May 2011 - 21:16 | BenMeekhof | Throughput test, 64GiB file, up to 24 threads, iozone output |
| throughput_test3.png | 14 K | 06 May 2011 - 21:16 | BenMeekhof | Throughput test, 64GiB file, up to 24 threads, graph image |
| throughput_test3.xls | 24 K | 07 May 2011 - 02:44 | BenMeekhof | Throughput test, 64GiB file, up to 24 threads |
| throughput_test4.png | 14 K | 09 May 2011 - 15:59 | BenMeekhof | Throughput with all tests, 64GiB file, 4KiB records, 6 threads |
| throughput_test4_alltests_6threads.out | 5 K | 09 May 2011 - 15:59 | BenMeekhof | Throughput with all tests, 64GiB file, 4KiB records, 6 threads |
| throughput_test4_alltests_6threads.xls | 22 K | 09 May 2011 - 15:59 | BenMeekhof | Throughput with all tests, 64GiB file, 4KiB records, 6 threads |
| throughput_test5_all_reconfig.out | 5 K | 16 May 2011 - 20:21 | BenMeekhof | Throughput with all tests after recommended configuration changes |
| throughput_test5_all_reconfig.png | 14 K | 16 May 2011 - 20:25 | BenMeekhof | Throughput with all tests after recommended configuration changes |
| throughput_test5_all_reconfig.xls | 22 K | 16 May 2011 - 20:21 | BenMeekhof | Throughput with all tests after recommended configuration changes |
| throughput_test6_reconfig.out | 19 K | 17 May 2011 - 13:35 | BenMeekhof | Throughput test, 64GiB file, 512KiB record, up to 24 threads, series2 |
| throughput_test6_reconfig.png | 13 K | 17 May 2011 - 13:35 | BenMeekhof | Throughput test, 64GiB file, 512KiB record, up to 24 threads, series2 |
| throughput_test6_reconfig.xls | 25 K | 17 May 2011 - 13:35 | BenMeekhof | Throughput test, 64GiB file, 512KiB record, up to 24 threads, series2 |


Topic revision: r17 - 27 May 2011, BenMeekhof

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.
