
Lustre Basics

Types of Servers & Block Storage

Management Server (MGS)

The MGS stores configuration information for all the Lustre file systems in a cluster and provides this information to other Lustre components. Each Lustre target contacts the MGS to provide information, and Lustre clients contact the MGS to retrieve information.

Requirements:

- Memory: Not mentioned in the documentation.

Management Target (MGT)

Block storage for the MGS, usually just a disk on the MGS machine. Even the most complex Lustre systems need less than 100 MB of block storage.

Requirements:

- Storage: Less than 100 MB of storage is needed.

Meta-data Server (MDS)

The MDS makes metadata stored in one or more MDTs available to Lustre clients. Each MDS manages the names and directories in the Lustre file system(s) and provides network request handling for one or more local MDTs.

Requirements:

- Memory: Depends on the setup, but 16 GB looks like a good minimum.

- Total storage across MDTs: 1-2% of the filesystem size. For example, if the filesystem is expected to hold 1000 TB, the MDTs need 10-20 TB of striped-mirrored storage, i.e., 20-40 TB of raw storage.

- MDTs: 1-4 MDTs per MDS
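The MDT sizing arithmetic above can be checked with a few lines of Python. This is a minimal sketch: the helper name `mdt_sizing` is hypothetical, and it assumes the 1-2% ratio from the text plus striped mirrors that keep two full copies of every block (so raw capacity is twice the usable capacity).

```python
def mdt_sizing(fs_size_tb: float, low_pct: float = 1.0, high_pct: float = 2.0,
               copies: int = 2) -> dict:
    """Return usable and raw MDT capacity ranges (TB) for a given filesystem size.

    Hypothetical helper, not a Lustre tool. Assumes the 1-2% rule of thumb and
    `copies` full copies of the data (2 for striped mirrors).
    """
    usable = (fs_size_tb * low_pct / 100, fs_size_tb * high_pct / 100)
    raw = (usable[0] * copies, usable[1] * copies)
    return {"usable_tb": usable, "raw_tb": raw}


# The 1000 TB example from the text.
print(mdt_sizing(1000))  # {'usable_tb': (10.0, 20.0), 'raw_tb': (20.0, 40.0)}
```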

Meta-data Target (MDT)

The MDT stores metadata (such as filenames, directories, permissions and file layout) on storage attached to an MDS. For Lustre software release 2.3 and earlier, each file system has exactly one MDT. An MDT on a shared storage target can be available to multiple MDSs, although only one can access it at a time. If an active MDS fails, a standby MDS can serve the MDT and make it available to clients. This is referred to as MDS failover.

Since Lustre software release 2.4, multiple MDTs are supported through the Distributed Namespace Environment (DNE). In addition to the primary MDT that holds the filesystem root, more MDS nodes, each with their own MDTs, can be added to hold sub-directory trees of the filesystem. Since Lustre software release 2.8, DNE also allows the filesystem to distribute the files of a single directory over multiple MDTs. A directory that is distributed across multiple MDTs is known as a striped directory.

Requirements:

- Storage: A single MDT can have up to 8 TB if using ldiskfs (Lustre's default backend file system) or up to 64 TB if using ZFS as the backend.
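The two DNE placement modes described above (whole sub-directory trees on their own MDT, and the entries of one striped directory spread over several MDTs) can be contrasted with a toy model. This is an illustrative sketch only, not Lustre's implementation: the remote-directory table, the MDT indices, and both function names are hypothetical, and `zlib.crc32` is a stand-in for whatever hash Lustre actually uses for striped directories.

```python
import zlib

# Hypothetical remote-directory table (DNE, 2.4+): each subtree is pinned to one MDT.
REMOTE_DIRS = {"/": 0, "/home": 1, "/scratch": 2}


def mdt_for_path(path: str) -> int:
    """Return the MDT holding `path`, using the longest matching remote-directory prefix."""
    best = "/"
    for prefix in REMOTE_DIRS:
        inside = path == prefix or path.startswith(prefix.rstrip("/") + "/")
        if inside and len(prefix) > len(best):
            best = prefix
    return REMOTE_DIRS[best]


def mdt_for_entry(name: str, stripe_count: int) -> int:
    """Striped directory (2.8+): pick the stripe (one per MDT) for an entry of ONE directory."""
    return zlib.crc32(name.encode()) % stripe_count


print(mdt_for_path("/home/alice/data.txt"))  # 1: the whole /home subtree sits on MDT 1
print(mdt_for_path("/homework"))             # 0: not under /home, so it stays with the root
print(sorted({mdt_for_entry(f"file{i}", 4) for i in range(100)}))  # stripes used by 100 entries
```

The design point is that remote directories balance load only between subtrees, while a striped directory also spreads a single very large directory across MDTs.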

Object Storage Server (OSS)

The OSS provides file I/O service and network request handling for one or more local OSTs.

Requirements:

- Memory: A good rule of thumb is 8 GB of base memory plus 3 GB of memory for each OST.

- OSTs: 1-32 OSTs per OSS, with a total capacity that does not exceed 256 TB.
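The OSS rules of thumb above can be turned into a quick planning check. This is a minimal sketch: the constants come straight from the text, while the helper names `oss_memory_gb` and `check_oss_layout` and the example layout of four 60 TB OSTs are hypothetical.

```python
# Rule-of-thumb constants taken from the text above.
BASE_MEMORY_GB = 8
MEMORY_PER_OST_GB = 3
MAX_OSTS_PER_OSS = 32
MAX_OSS_CAPACITY_TB = 256


def oss_memory_gb(num_osts: int) -> int:
    """Rule-of-thumb memory (GB) for an OSS serving num_osts OSTs."""
    return BASE_MEMORY_GB + MEMORY_PER_OST_GB * num_osts


def check_oss_layout(ost_sizes_tb: list[float]) -> list[str]:
    """Return problems with a proposed OSS layout (an empty list means it fits the guidelines)."""
    problems = []
    if not 1 <= len(ost_sizes_tb) <= MAX_OSTS_PER_OSS:
        problems.append(f"{len(ost_sizes_tb)} OSTs is outside 1-{MAX_OSTS_PER_OSS}")
    total = sum(ost_sizes_tb)
    if total > MAX_OSS_CAPACITY_TB:
        problems.append(f"total {total} TB exceeds {MAX_OSS_CAPACITY_TB} TB")
    return problems


# Hypothetical OSS with four 60 TB OSTs.
layout = [60, 60, 60, 60]
print(oss_memory_gb(len(layout)))  # 20
print(check_oss_layout(layout))    # []
```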

Object Storage Target (OST)

User file data is stored in one or more objects, each object on a separate OST in a Lustre file system. The number of objects per file is configurable by the user and can be tuned to optimize performance for a given workload.

Requirements:

- Storage: A single OST can have up to 256 TB.
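Spreading one file over several objects works like RAID 0 striping: the file is cut into fixed-size chunks that are dealt out to its objects in turn. The sketch below maps a byte offset to an object. `stripe_size` and `stripe_count` correspond to the per-file layout parameters users can set (for example with `lfs setstripe`); the 1 MiB and 4-object defaults and the helper name `locate` are assumptions for illustration.

```python
def locate(offset: int, stripe_size: int = 1 << 20, stripe_count: int = 4) -> tuple[int, int]:
    """Map a byte offset in a file to (object index, byte offset inside that object).

    Hypothetical helper assuming plain round-robin (RAID 0 style) striping.
    """
    if offset < 0 or stripe_size <= 0 or stripe_count <= 0:
        raise ValueError("offset must be >= 0; stripe_size and stripe_count must be > 0")
    chunk = offset // stripe_size          # which stripe-sized chunk of the file
    obj_index = chunk % stripe_count       # chunks are dealt to objects in turn
    full_rounds = chunk // stripe_count    # complete passes over all objects before this chunk
    obj_offset = full_rounds * stripe_size + offset % stripe_size
    return obj_index, obj_offset


# Walk the first six MiB of a file: objects are used in the order 0, 1, 2, 3, 0, 1.
for mib in range(6):
    print(mib, locate(mib << 20))
```

Raising `stripe_count` typically lets a large file's I/O use more OSTs in parallel; a small file that fits in one chunk touches only one object regardless.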

Clients

While technically not a server or block storage, clients are included for completeness. A client is any system that will access the Lustre filesystems, such as a login node or worker node.

Requirements:

- Memory: At least 2 GB.

RAID vs. ZFS

RAID and ZFS are two tools that tackle the same goal: taking multiple physical disks and presenting them as one disk (called a RAID array or ZFS pool) to the OS, or in this case to Lustre. In addition, a fraction of the physical disks' storage can be used to hold redundant copies or parity of the data, so that some number of physical disks can fail without any loss of information. There are several configurations that RAID and ZFS have in common, as described here.

A key difference between RAID and ZFS is that RAID only combines the physical disks before handing the "virtual" or "logical" disk to the OS/filesystem, while ZFS also acts as the filesystem. So in the case of RAID, the filesystem has no knowledge of the physical disks that make up its logical disk, whereas ZFS does. This allows ZFS to do many useful things that RAID cannot, such as taking quick snapshots of the current filesystem.

The biggest advantage ZFS has over RAID (for us, anyway) is that new disks can be added to a ZFS pool and old disks can be replaced with larger disks. This is not possible with RAID: once a RAID array is created, new storage cannot be added, and if a disk is replaced with a larger disk, the extra space is simply wasted. It is for these reasons that we plan on using ZFS to build our Lustre system.

The Lustre Hierarchy

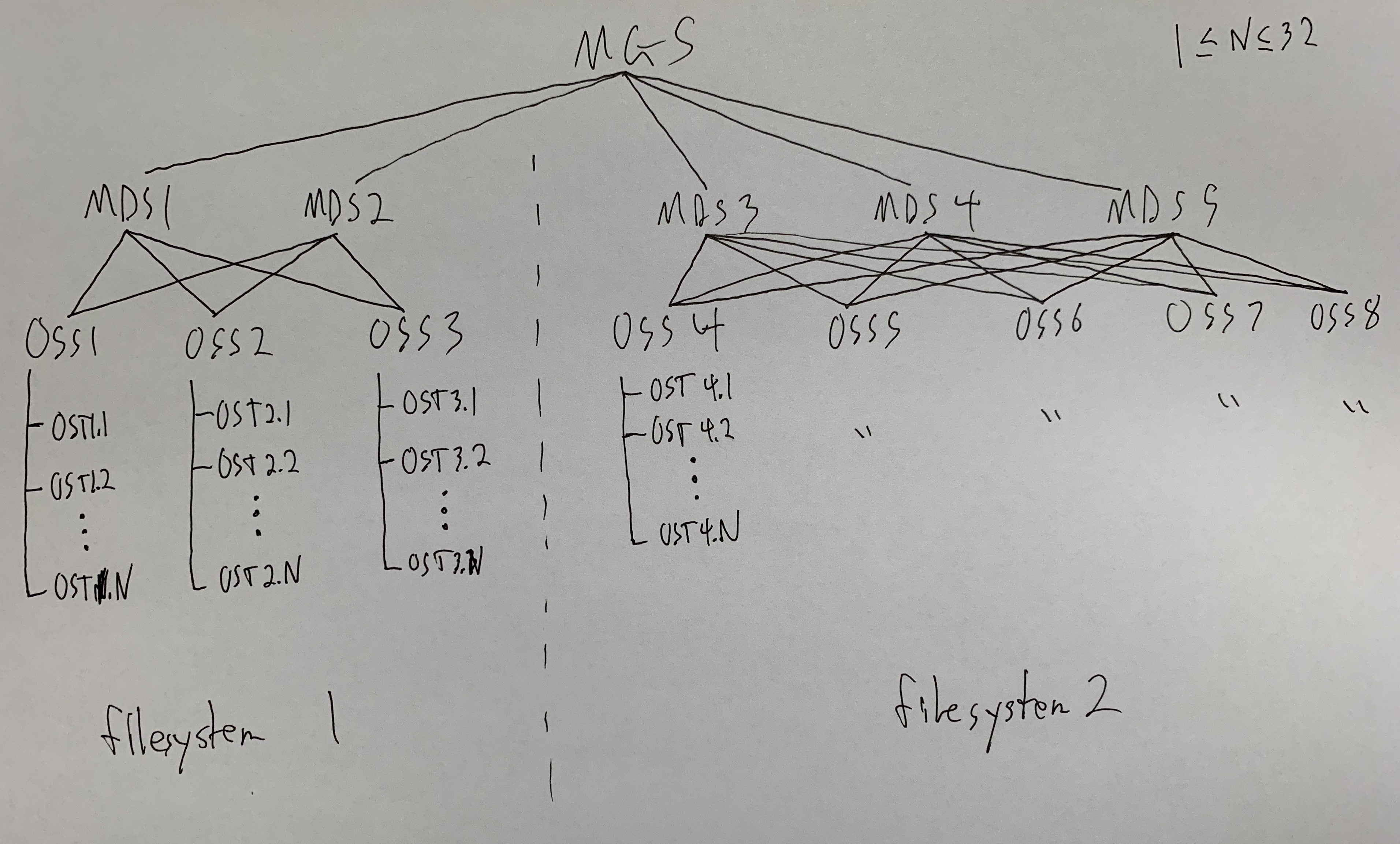

The diagram above illustrates the Lustre hierarchy. It goes as follows:

- At the very top is the MGS, which holds information about the Lustre system as a whole and about all of the different filesystems defined in it.

- Next, the Lustre system is split into the different filesystems it serves.

- Each of those filesystems has some number of MDSs that host the meta-data of the files on that filesystem.

- Below that are the OSSs of the filesystem. This layer is fully connected with the layer above it: while a file's meta-data is stored on one MDS, the file's contents can be striped across all of the OSSs in the filesystem. Contrast this with a traditional hierarchy where, for example, MDS1 would serve only OSS1 and OSS2 while MDS2 would serve only OSS3 and OSS4. There, a file with its meta-data on MDS1 could only have its contents on OSS1 and OSS2, so if all the files a user requested happened to be on MDS1, OSS1 and OSS2 could become a bottleneck.

- At the very bottom are the OSTs, which store the contents of the files.
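The bottleneck argument in the hierarchy description can be made concrete with a toy model. The sketch below is hypothetical: the server names and the partitioned layout come from the example in the text, and `osses_serving` is an illustrative helper, not a Lustre command. It reports which OSSs can serve data when every requested file has its meta-data on MDS1.

```python
OSSES = ["OSS1", "OSS2", "OSS3", "OSS4"]

# Hypothetical partitioned layout: each MDS can only place file contents on "its" OSSs.
PARTITIONED = {"MDS1": ["OSS1", "OSS2"], "MDS2": ["OSS3", "OSS4"]}

# Fully connected layout, as in Lustre: a file on any MDS can use every OSS.
FULLY_CONNECTED = {"MDS1": OSSES, "MDS2": OSSES}


def osses_serving(requests: list[str], layout: dict[str, list[str]]) -> set[str]:
    """Return the OSSs that can serve data for files whose meta-data is on the given MDSs."""
    serving: set[str] = set()
    for mds in requests:
        serving.update(layout[mds])
    return serving


# Worst case from the text: every requested file has its meta-data on MDS1.
hot_requests = ["MDS1"] * 1000
print(sorted(osses_serving(hot_requests, PARTITIONED)))      # ['OSS1', 'OSS2']
print(sorted(osses_serving(hot_requests, FULLY_CONNECTED)))  # ['OSS1', 'OSS2', 'OSS3', 'OSS4']
```

In the fully connected case the data traffic for MDS1's files can be spread over all four OSSs instead of piling onto two.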

Lustre Networking (LNet)


Topic revision: r4 - 25 Oct 2019, ForrestPhillips

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.
