Michigan/AGLT2 SuperComputing 2015 Network Demonstrations

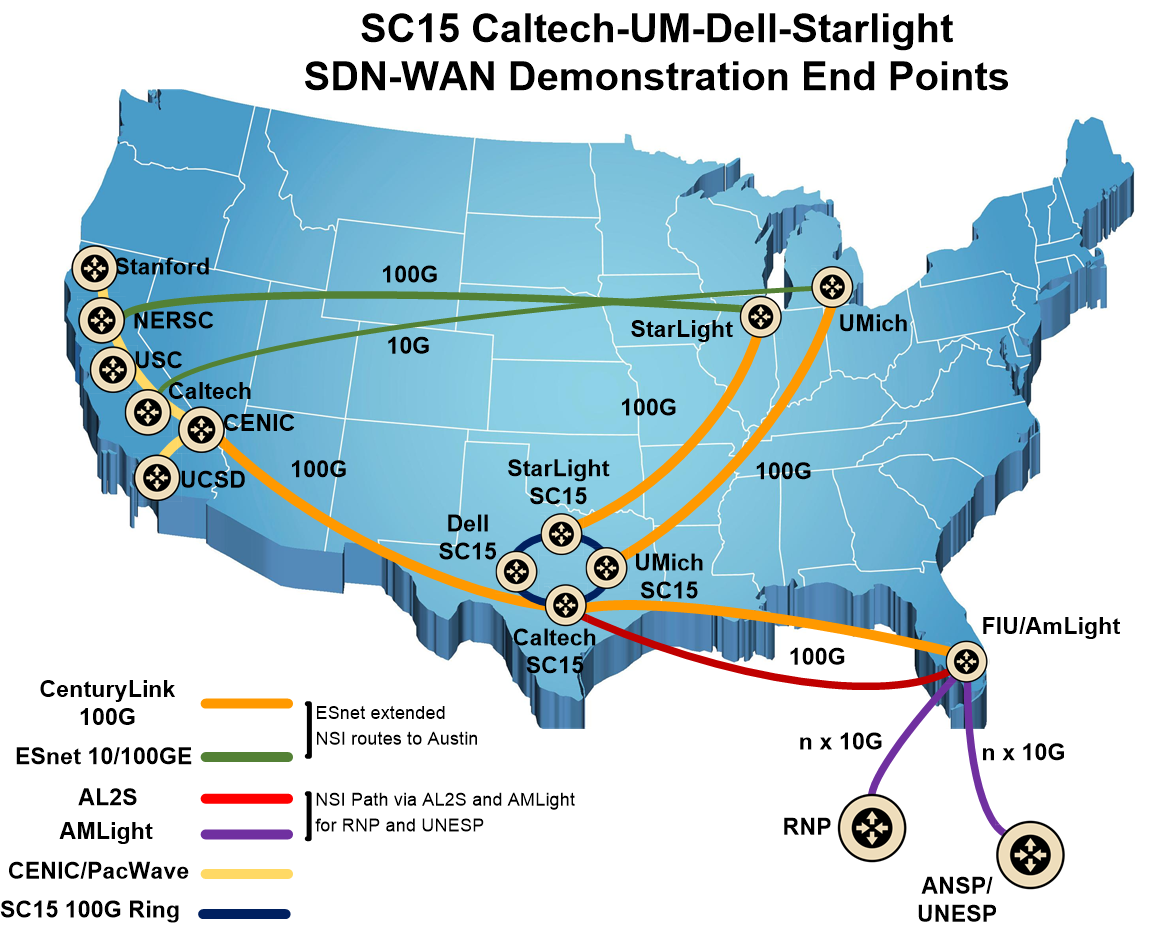

This year the University of Michigan and AGLT2 are again participating in SuperComputing 2015. The venue is Austin, Texas, and the conference runs from November 16th through the 20th. Below is a diagram showing our partners in the SDN Optimized High-Performance Data Transfer Systems for Exascale Science demonstration.

Demo Description: The next generation of major science programs faces unprecedented challenges in harnessing the wealth of knowledge hidden in exabytes of globally distributed scientific data. Researchers from Caltech, FIU, Stanford, Univ of Michigan, Vanderbilt, UCSD and UNESP, along with other partner teams, have come together to meet these challenges by leveraging recent major advances in software-defined and Terabit/sec networks, workflow optimization methodologies, and state-of-the-art long-distance data transfer methods. This demonstration focuses on network path-building and flow optimizations using SDN and intelligent traffic engineering techniques, built on top of a 100G OpenFlow ring at the show site and connected to remote sites including the Pacific Research Platform (PRP). Remote sites will be interconnected using dedicated WAN paths provisioned using NSI dynamic circuits. The demonstrations include (1) the use of Open vSwitch (OVS) to extend the wide area dynamic circuits storage to storage, with stable shaped flows at any level up to wire speed, (2) a pair of data transfer nodes (DTNs) designed for an aggregate 400Gbps flow through 100GE switches from Dell, Inventec and Mellanox, and (3) the use of Named Data Networking (NDN) to distribute and cache large high energy physics and climate science datasets.

We are also participating in the LHCONE Point2Point Service with Data Transfer Nodes demonstration with our LHCONE partners.

Demo description: LHCONE (LHC Open Network Environment) is a globally distributed, specialized environment in which large volumes of LHC data are transferred among different international LHC Tier (data center and analysis) sites. To date these transfers are conducted over the LHC Optical Private Network (LHCOPN, dedicated high capacity circuits between LHC Tier1s (data centers)) and via LHCONE, currently based on L2+VRF services. The LHCONE Point2Point Service is planning to future-proof ways of networking for LHC, e.g., by providing support for OpenFlow. This demonstration will show how this goal can be accomplished, using dynamic path provisioning and at least one Data Transfer Node (DTN) in the US connected to at least one DTN in Europe, transferring LHC data between tiers. It will show a network services model that matches the requirements of LHC high energy physics research with emerging capabilities for programmable networking, and it will integrate programmable networking techniques, including the Network Service Interface (NSI), a protocol defined within the Open Grid Forum standards organization. Multiple LHC sites will transfer LHC data through DTNs. DTNs are edge nodes specifically designed to optimize high performance data transport; they have no other function. The DTNs will be connected by layer 2 circuits, created through dynamic requests by NSI.

Below is a diagram of the wide-area network topology in use for the University of Michigan for SC15. Both of the above demos use this infrastructure. We would like to thank our corporate partners Dell, Adva, QLogic, Juniper and CenturyLink, as well as ESnet and SciNet. Without their strong support we would have been unable to undertake these demos.

Wiki Area for SC15 Technical Details

To help organize our efforts we have set up a WikiArea to keep track of our technical details, including drawings, spreadsheets and documents.

Presentations from SC15

To be filled in as we get presentations completed.

- Azher Mughal/Caltech, "Programming OpenFlow Flows for Scientific Profit"

- Kaushik De/Univ Texas Arlington, "PANDA: Update on the ATLAS Global Workflow System and High Performance Networks"

- Shawn McKee/Univ of Michigan, "High-Performance Use of 100G Networks Using Software-Defined Networking and Minimal Infrastructure"

- Shawn McKee/Univ. of Michigan, "The OSiRIS Project"

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.
