NetworkPlanning (22 Feb 2011, RoyHockett)

Planning for the production network.

NetworkHardwareInfo

Near-term To-Do List

Here is a list of network-related items that need doing as of February 4, 2011:

- Get an ASN from ARIN (Shawn, Roy, Mark Driscoll helping?). Waiting to hear from Mark, who volunteered to help us get an ASN. Cost is $500 plus $100/year after the first year. Needed to be able to optimize AGLT2 routing and paths.

- Enable VRF on Nile to allow us to utilize both the UltraLight and MiLR wavelengths.

- Enable MST on all AGLT2 switches. Currently Nile is running a single instance of MST. Need to (re)configure:

- Amazon, SW1, SW2, SW3, SW4, SW5, SW6, SW7, SW8, SW9, SW10, SW11, SW12, SW13 and SW14 to run MST

- Need to then optimize the MSTs created to separate traffic on redundant links, balancing traffic to the extent possible.

- VLAN 6 to be removed from Physics/Randall (Amazon, SW5, SW6, SW8 and no longer on links past Nile)

- Discards on Nile need investigating and fixing (See http://umopt1.grid.umich.edu/cacti/graph_view.php?action=tree&tree_id=14 )

- Nile blade #7 may be close to failing. Run diagnostics and get replaced if bad.

- Optimize 10GE port use on Nile for resiliency and performance (still some ports to relocate ?)

- Verify/upgrade firmware on SW1, SW2, SW3, SW4, SW7, SW11, SW12, SW13 and SW14

- Get Amazon secondary supervisor in "warm" mode (currently "cold"?)

- Price to add "DYNES" fiber connection from Nile to R-BIN-MiLR?

- Price to add second fiber path between Nile and SW5?

- Determine how best to spend UM DYNES network funds to support DYNES at UM.
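The ASN cost quoted in the first item can be turned into a cumulative figure for budgeting. A minimal sketch, assuming the $500 covers the first year and $100 is due for each year after that; `asn_cost` is a hypothetical helper, and the dollar figures are simply the ones in the note.

```python
def asn_cost(years: int, initial: int = 500, annual: int = 100) -> int:
    """Cumulative ASN cost in dollars over `years` years.

    Assumes the initial fee covers year one and the annual fee is
    charged for every year after the first, as the note reads.
    """
    if years < 1:
        raise ValueError("years must be >= 1")
    return initial + annual * (years - 1)


# Print the running total for a few planning horizons.
for y in (1, 3, 5):
    print(y, "year(s):", asn_cost(y), "USD")
```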

Recent Network Changes

As of February 4, 2011 we have completed the following:

- Removed VLAN 562 (Physics) from both AGLT2_LSA and AGLT2_RANDALL. Replaced it with VLAN 99 in LSA and VLAN 98 in RANDALL.

- Removed VLAN 808 (Grid) from Randall/Physics (left as 808 at LSA). Replaced with VLAN 97 in RANDALL/Physics.

- Moved gateway for VLAN 6 (10.1.1.2) from Amazon to HSRP, primary hosted on Nile and secondary hosted on Amazon

- Moved gateway for VLAN 4010 (10.10.1.2) from Nile to HSRP, primary hosted on Nile and secondary hosted on Amazon

- Fixed spanning-tree costs on various switches (SW5, SW6 and Amazon)

- Enabled LAGs (EtherChannels) from SW9 to Nile (2x10GE) and SW10 to Nile (2x10GE)

- Relocated Nile ports to put the above LAGs on blades #2 and #7 (both have daughtercards) and off blade #8

- Updated Cacti monitoring for the configuration changes above

- Upgraded firmware on SW5, SW6, Nile and Amazon
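The VLAN 6 and VLAN 4010 gateway moves above rely on HSRP (Cisco's Hot Standby Router Protocol): the router with the highest priority becomes active and answers for the virtual gateway address, ties are broken by the highest interface IP address, and the next router in line becomes standby. The sketch below models that election for the VLAN 4010 group. The priorities (Nile 110, Amazon at the default 100) and the routers' own interface addresses are hypothetical, and it assumes preemption is enabled, since without it a recovered higher-priority router does not take the active role back.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class HsrpPeer:
    name: str
    priority: int                  # HSRP priority 0-255; Cisco default is 100
    ip: ipaddress.IPv4Address      # router's own interface address, not the virtual IP


def elect(peers):
    """Return (active, standby) router names.

    Highest priority wins; equal priorities fall back to the highest
    interface IP. Assumes all peers are up and preemption is enabled.
    """
    ranked = sorted(peers, key=lambda p: (p.priority, p.ip), reverse=True)
    active = ranked[0].name
    standby = ranked[1].name if len(ranked) > 1 else None
    return active, standby


# Hypothetical values for the VLAN 4010 group (virtual gateway 10.10.1.2).
vlan4010 = [
    HsrpPeer("Amazon", 100, ipaddress.IPv4Address("10.10.1.4")),
    HsrpPeer("Nile", 110, ipaddress.IPv4Address("10.10.1.3")),
]
print(elect(vlan4010))
# If Nile fails, the surviving peer takes over the virtual address.
print(elect([p for p in vlan4010 if p.name != "Nile"]))
```

Raising Nile's priority above the default is what makes it the primary; Amazon only answers for the gateway when Nile is down.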

Intro

We want to understand the network requirements and design so that the proper equipment can be purchased. This will require a fairly detailed design to avoid hidden gotchas, and the planning work is needed to get the network rolled out. We should maintain flexibility in the network design to allow for possible changes in the T2 center, and we should identify the limitations that our equipment choices create. Areas to define are:

- Hardware

- Lightpaths

- Routers

- Switches

- Computer interfaces

- IP Networks (This may be the area to plan first...)

- Grid connection (Starlight)

- Connection to remainder of Internet if needed, including local campus connections

- Private networks

- Extended between the two sites? How?

- VLANs

- Firewalls?

- Network services that we need to provide (DNS?)

- What is the config for the IP network (netmask, gateway, DNS servers)?

- For the UM site: 192.41.230.0/24, gateway 192.41.230.1, Merit DNS servers. For the MSU site: 192.41.231.0/24, gateway TBD, Merit DNS servers.

- How do the storage nodes connect? What devices do they connect to, and on which IP networks?

- 2 x GigE

- 1 x 10 GigE

- IPMI

- How do the compute nodes connect?

- 2 x GigE

- IPMI

- Management nodes: how do they connect?

- Login (Tier 3 user) nodes: how do they connect?
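The node connection lists above translate directly into switch-port counts, which is what matters when sizing equipment for purchase. A minimal sketch, assuming each listed interface occupies one switch port and that IPMI uses its own dedicated port (some servers share it with eth0 instead); the node counts in the example are hypothetical. Management and login nodes are left out because their connections are still undecided.

```python
from collections import Counter

# Per-node interfaces from the lists above. Treating IPMI as a separate
# switch port is an assumption, not something the plan states.
NODE_PORTS = {
    "storage": {"GigE": 2, "10GigE": 1, "IPMI": 1},
    "compute": {"GigE": 2, "IPMI": 1},
}


def ports_needed(node_counts):
    """Total switch ports by type for a given number of nodes of each kind."""
    total = Counter()
    for kind, count in node_counts.items():
        for port_type, per_node in NODE_PORTS[kind].items():
            total[port_type] += per_node * count
    return dict(total)


# Hypothetical node counts, for illustration only.
print(ports_needed({"storage": 10, "compute": 40}))
```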

Diagrams

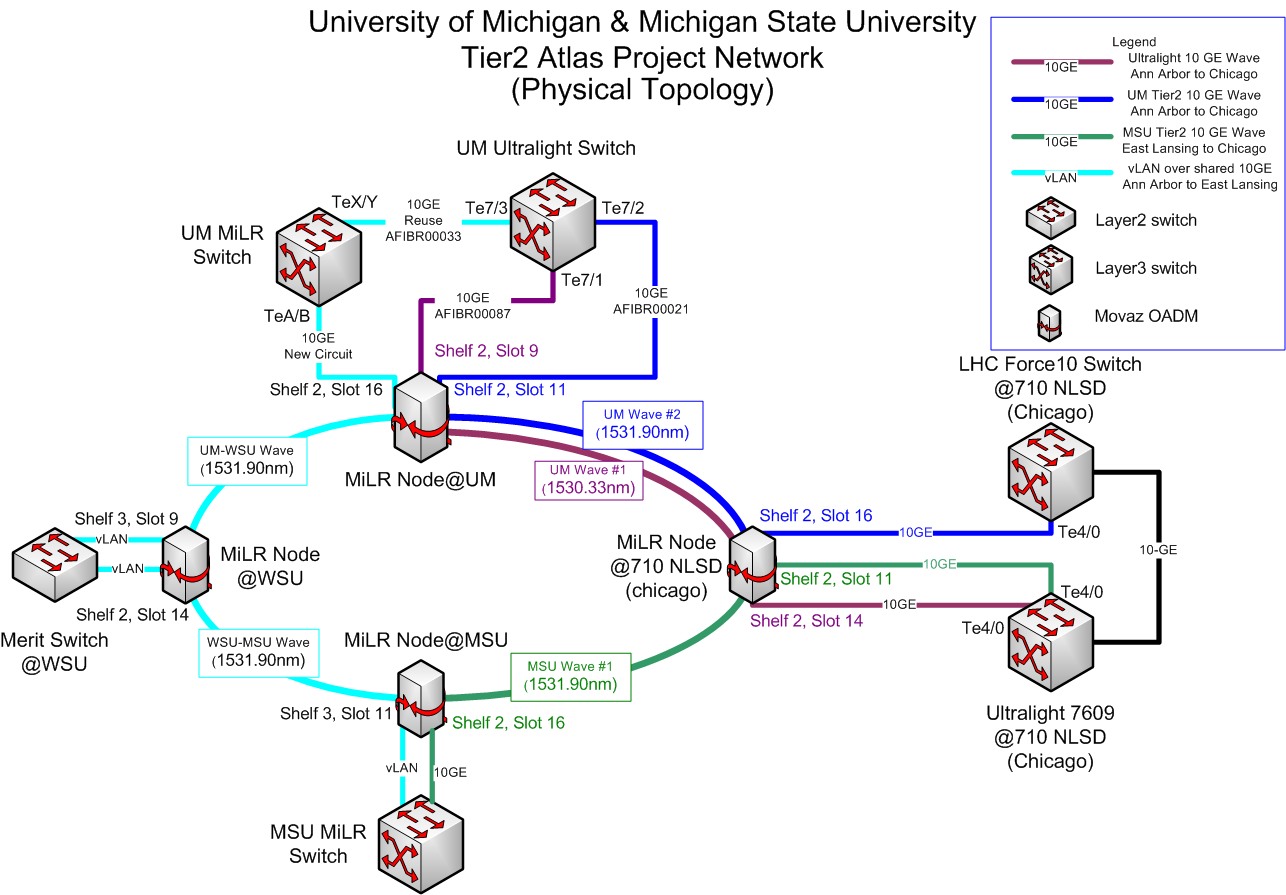

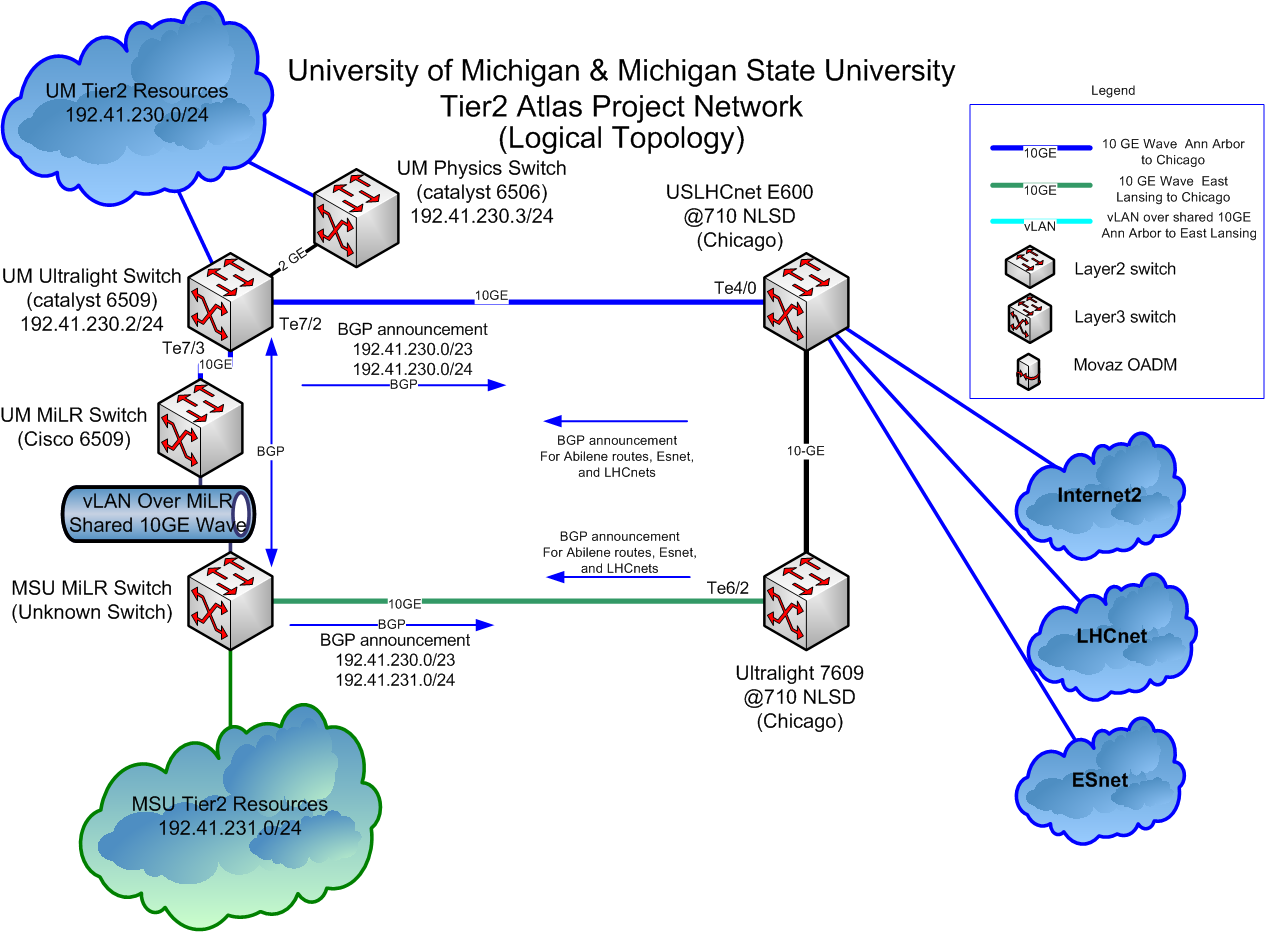

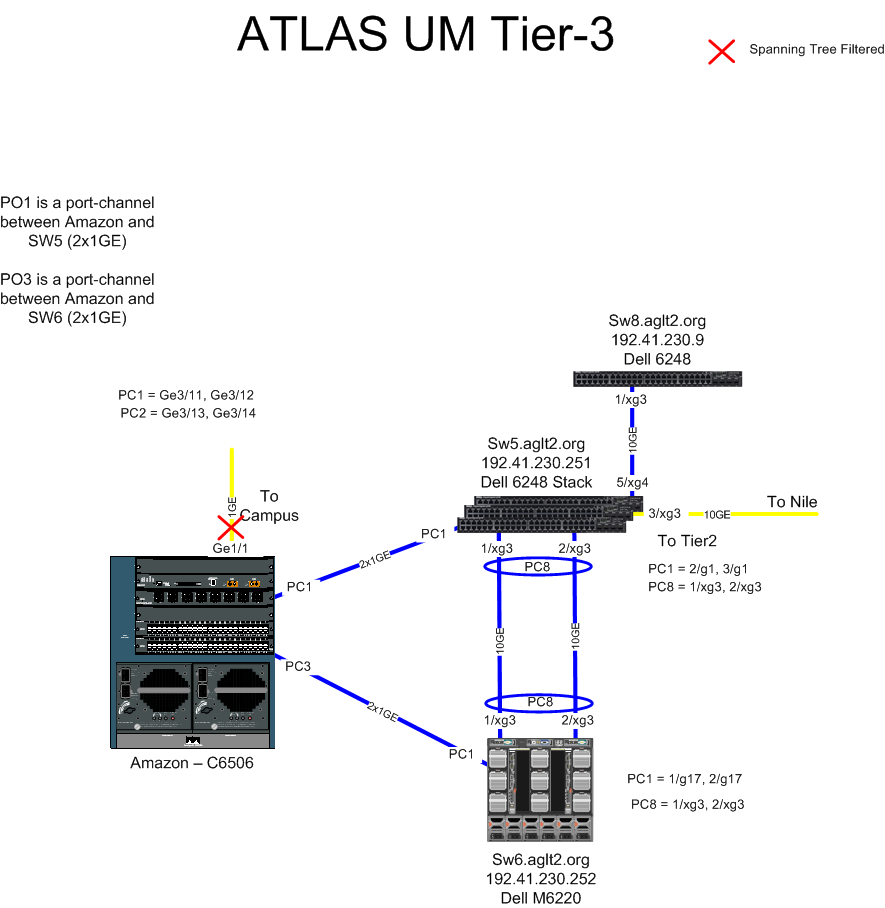

Want to make some pictures:

- Routers (including layer 3 switches) on the MiLR ring; want to include all layer 2 (any?) and layer 3 devices on the ring

- U-M detail

- MSU detail

- U-M LAN

- MSU LAN

| Name | Hardware |
|---|---|
| Ultralight | Cisco 6509-E, Sup720 |
| MSU Router | TBD |
| U-M | HP ProCurve 4100 |
| U-M | Cisco 6506, Sup2 (redundant) |
| USLHC | Force10 |

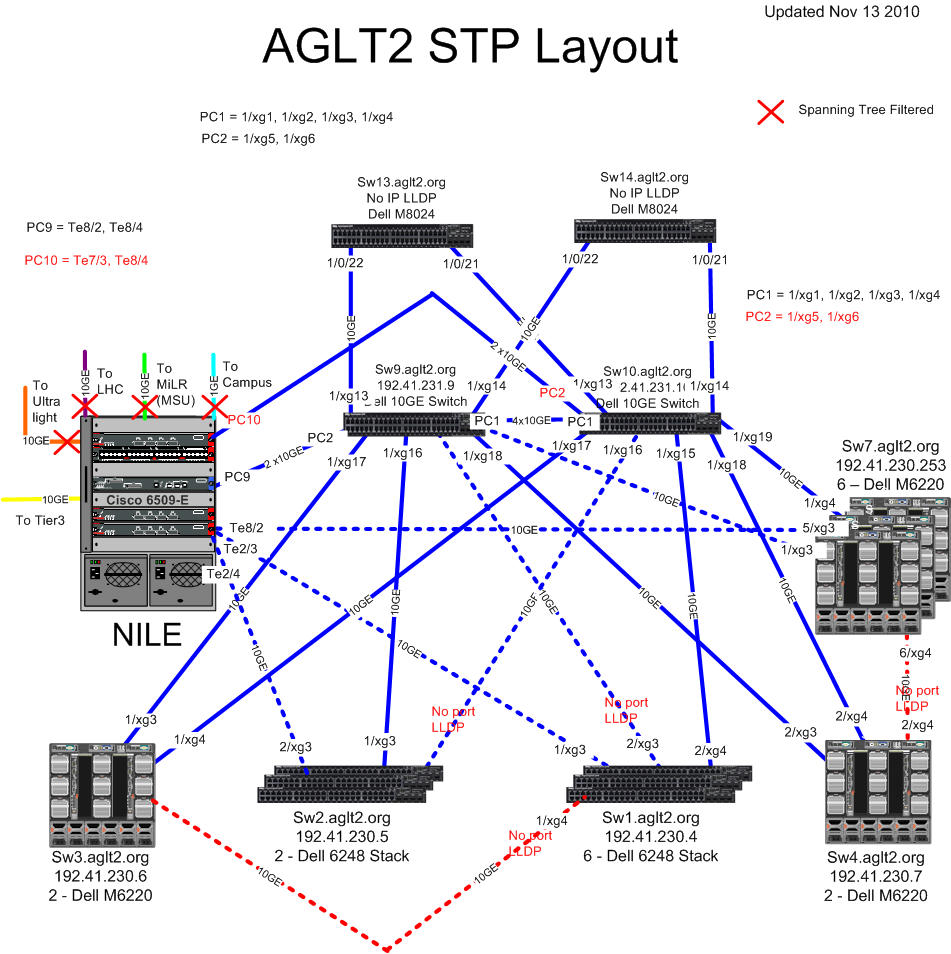

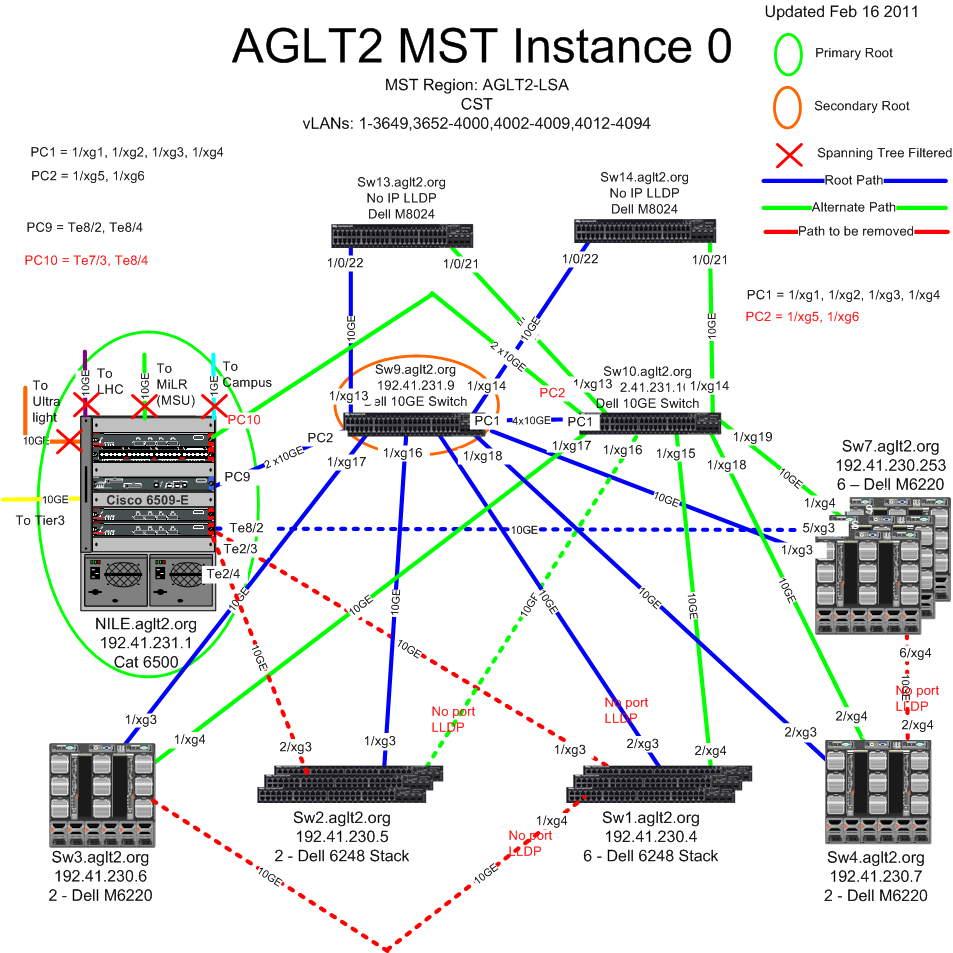

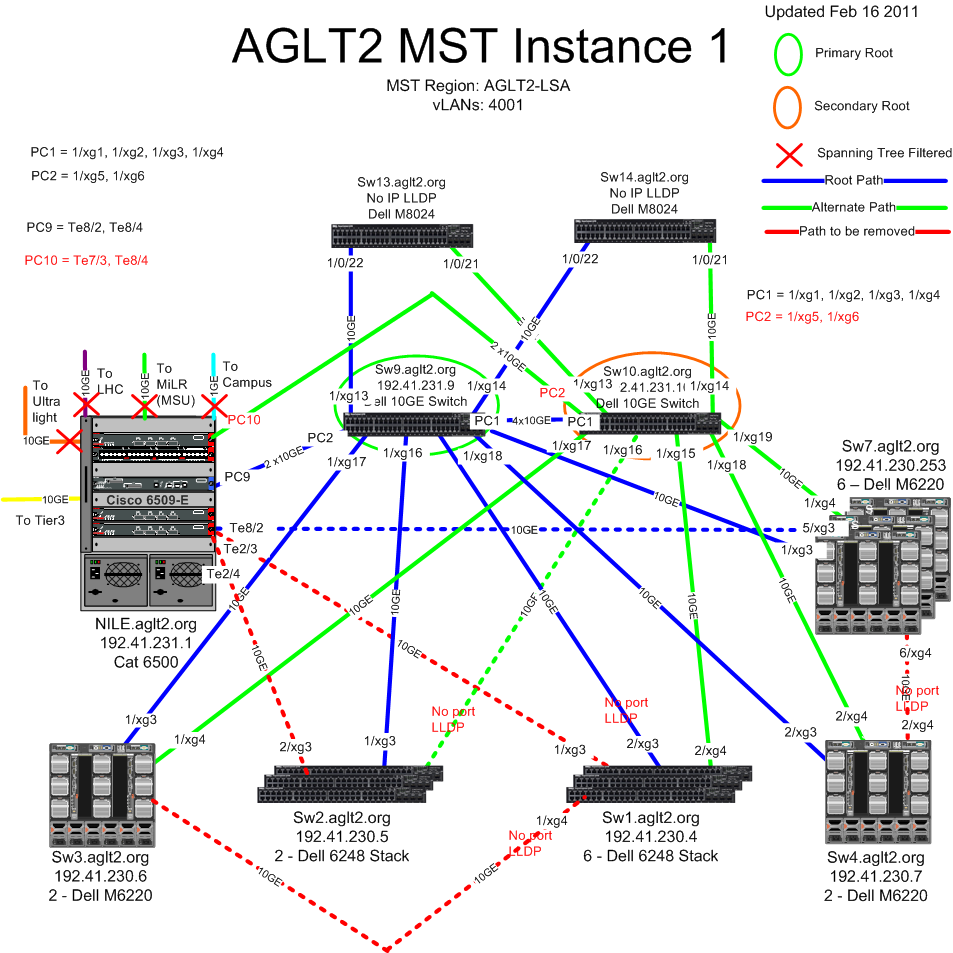

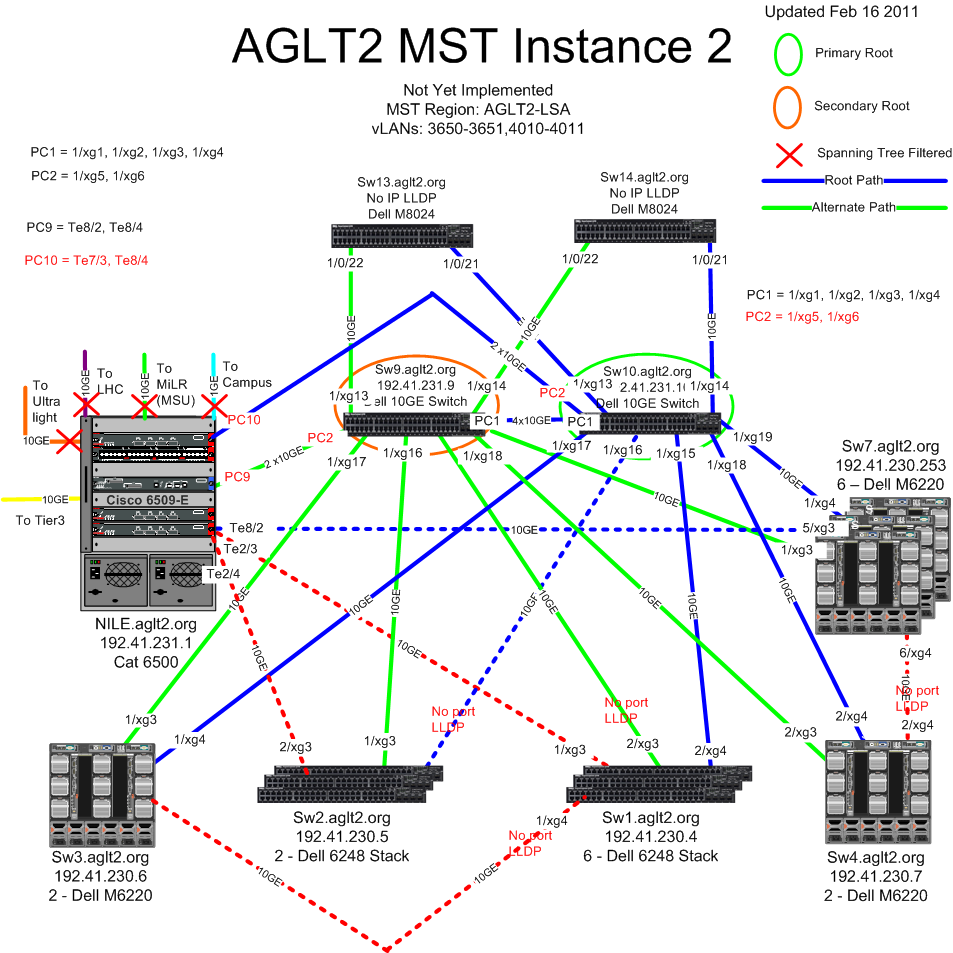

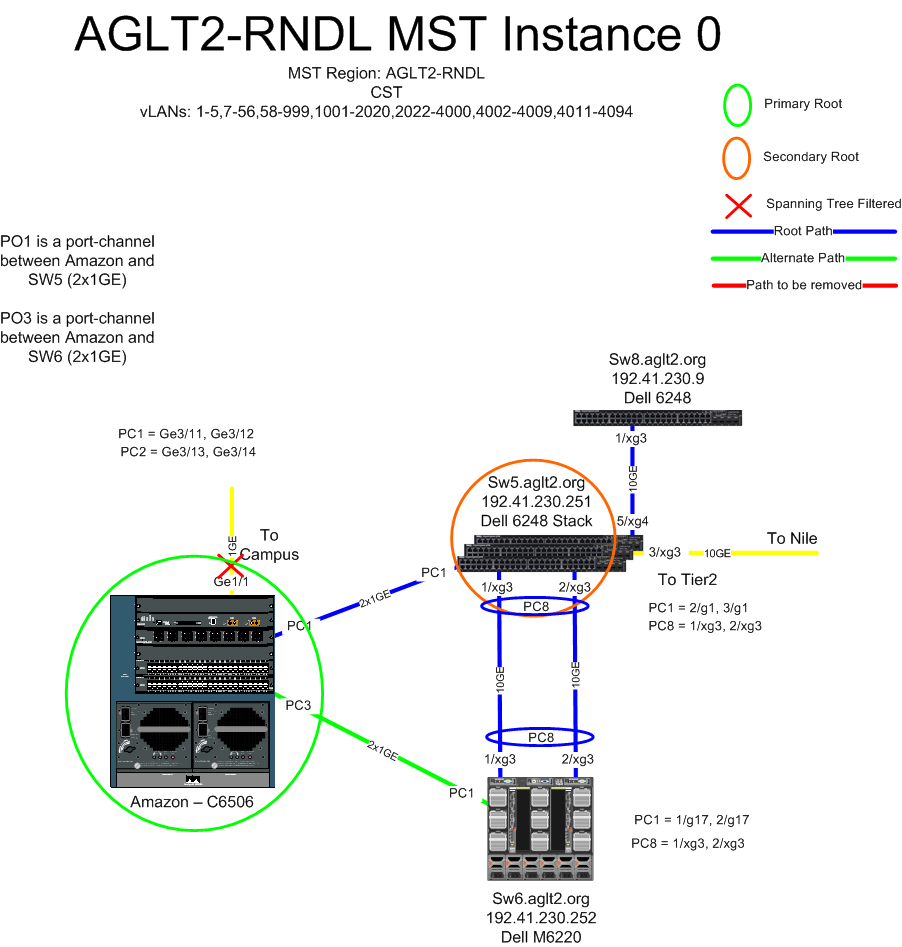

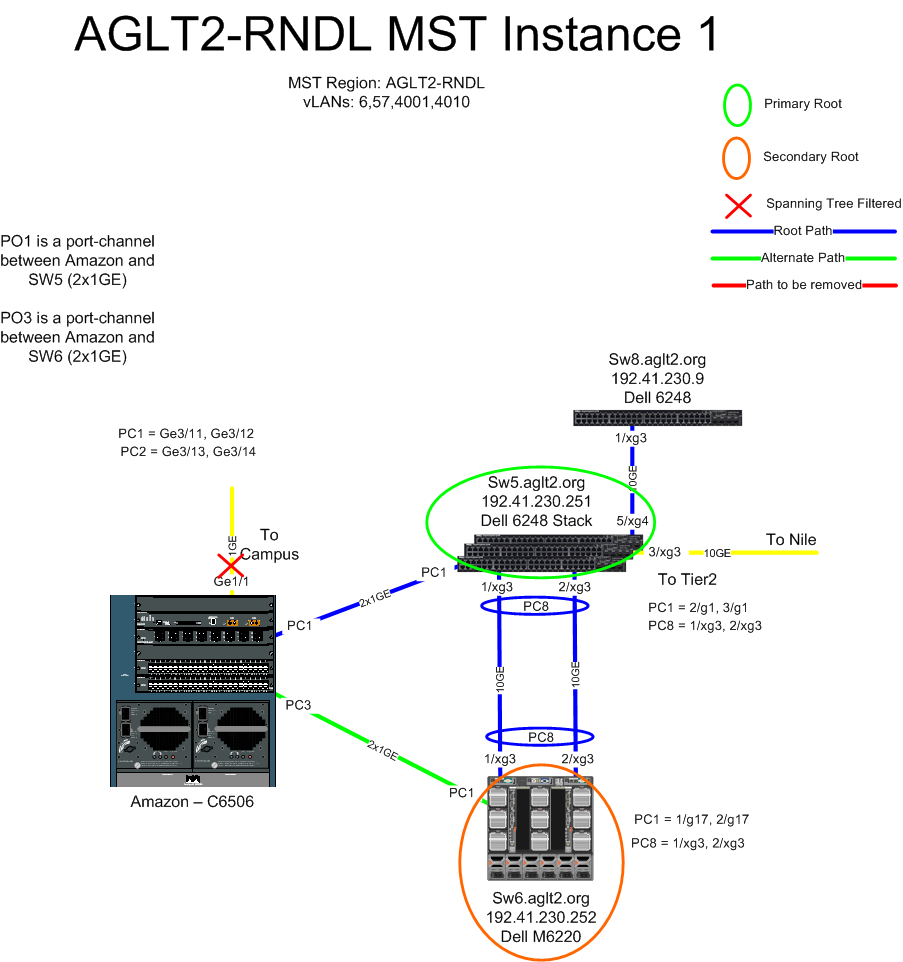

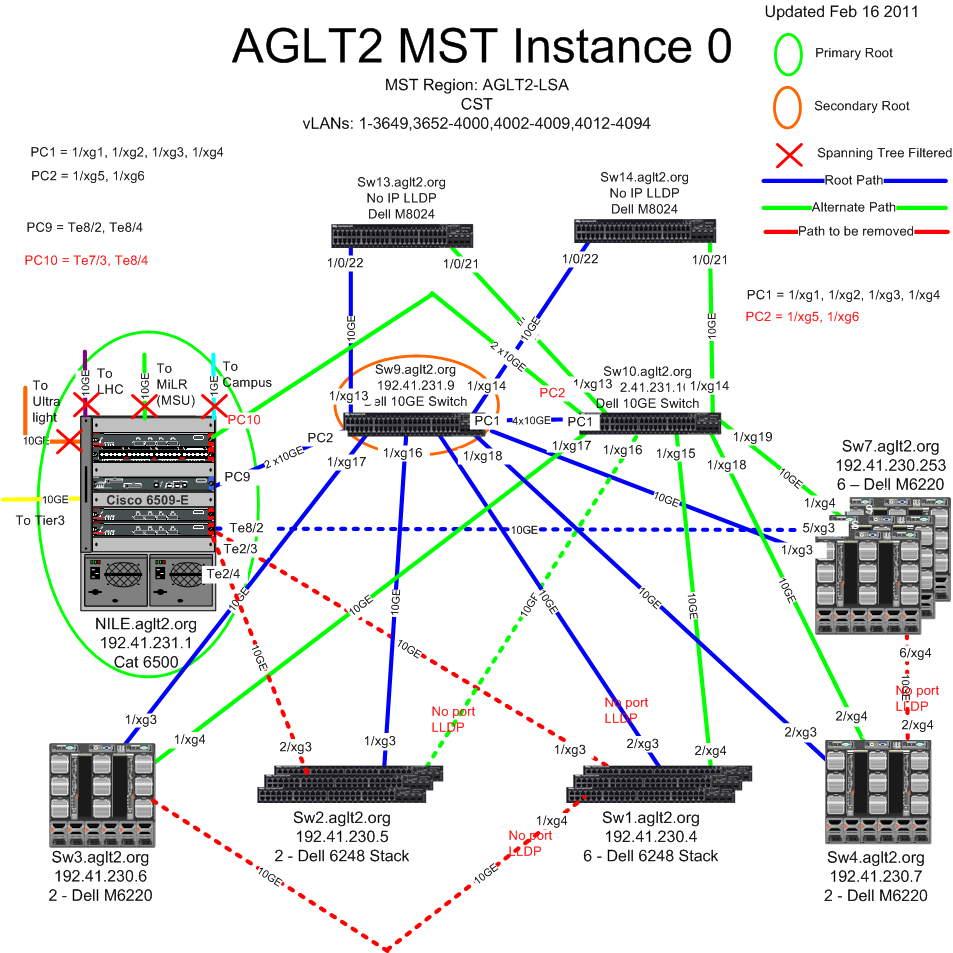

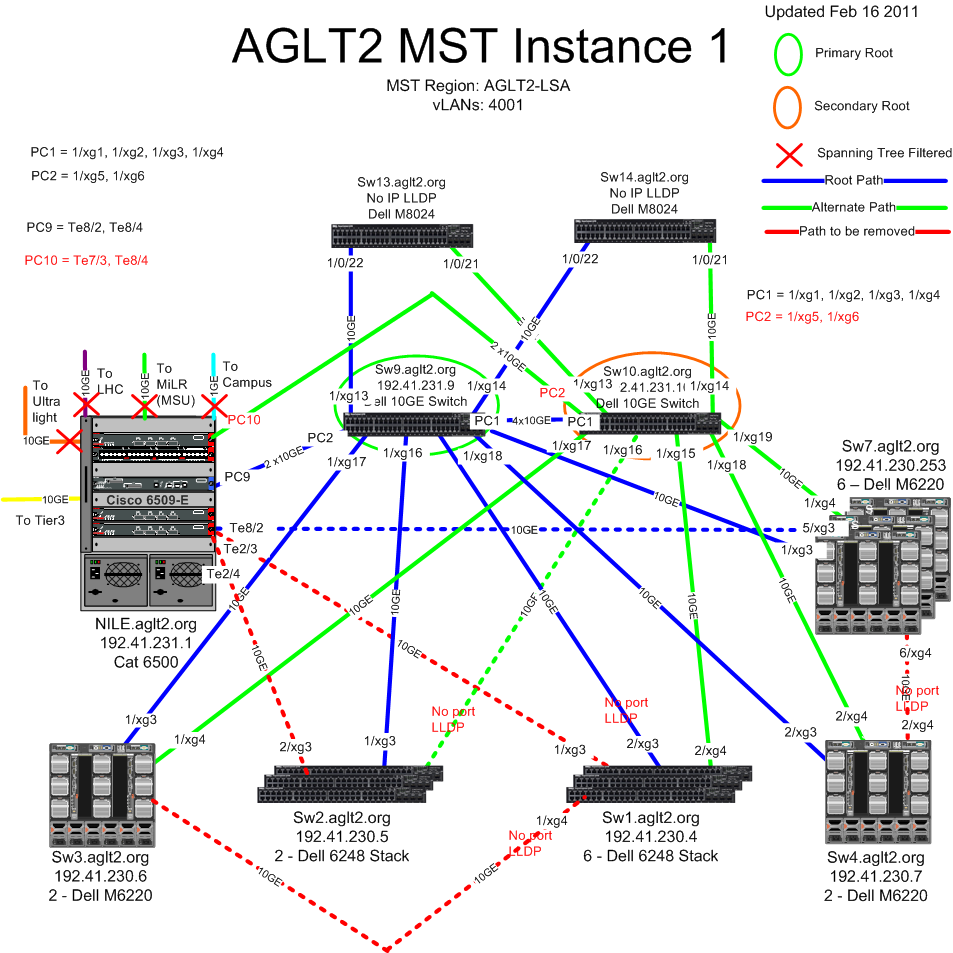

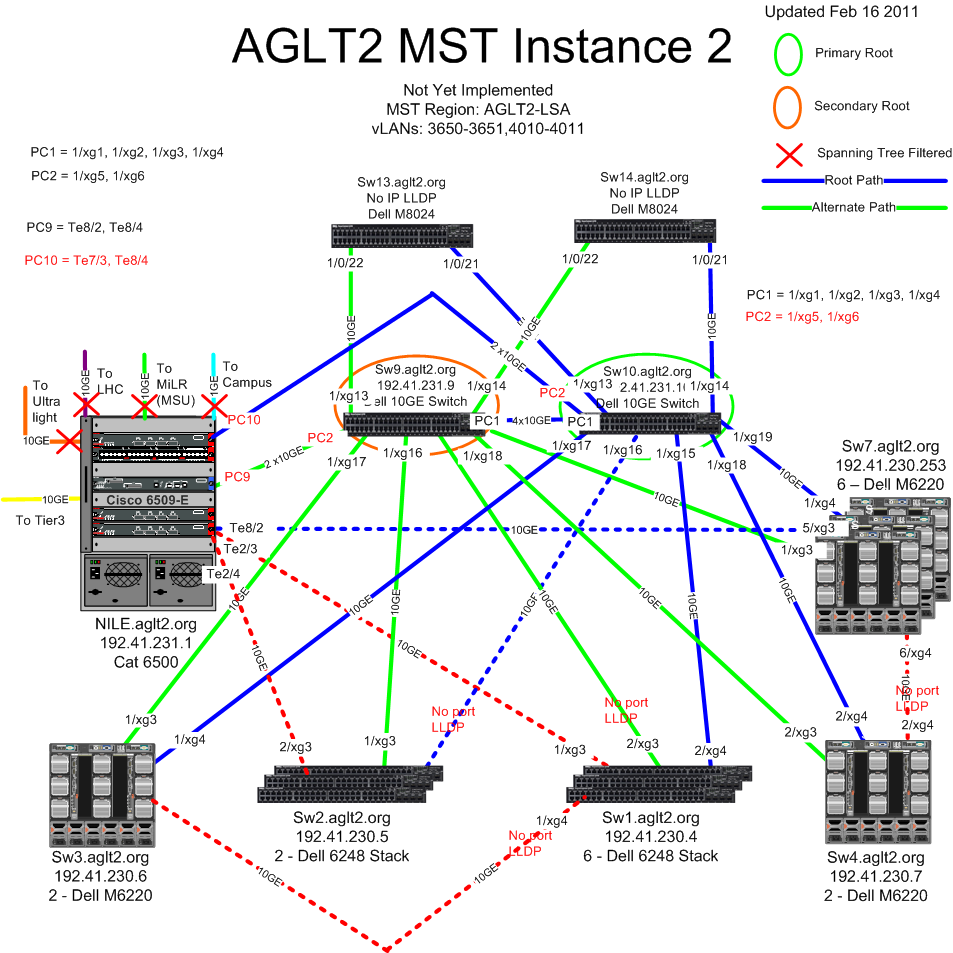

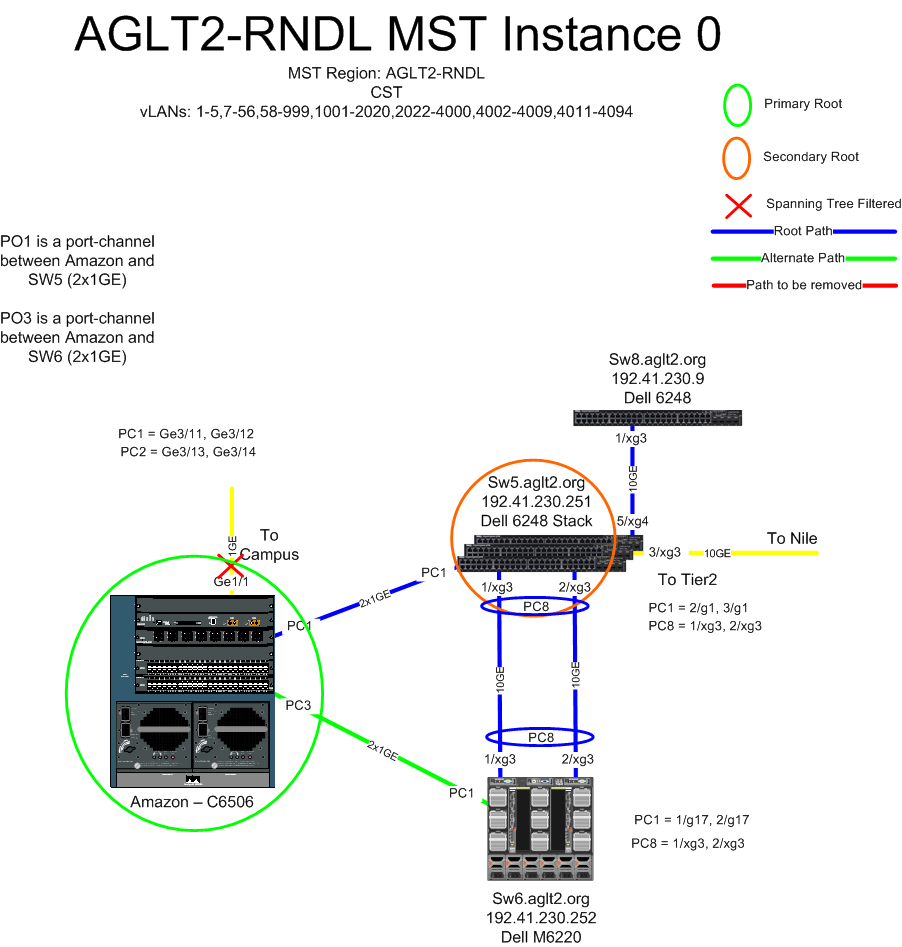

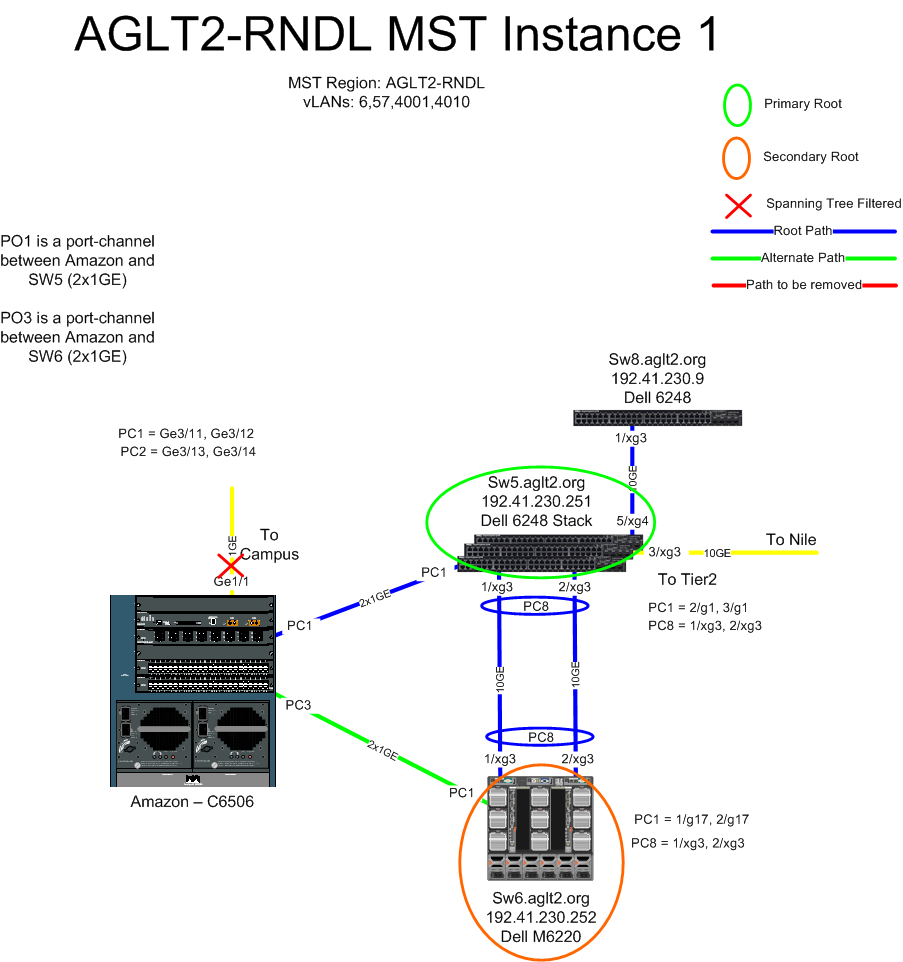

Spanning Tree Configuration

The AGLT2 UM networks run Multiple Spanning Tree (MST), with two regions: AGLT2-LSA and AGLT2-RNDL. Because UM runs PVST+, we pruned as many VLANs as possible so that no VLAN could create a loop, which allows MST to coexist with the surrounding PVST+ network. We also had to disable spanning tree on the ports connecting to other networks.

- AGLT2 LSA Spanning Tree Base:

- AGLT2 LSA MST Instance 0:

- AGLT2 LSA MST Instance 1:

- AGLT2 LSA MST Instance 2:

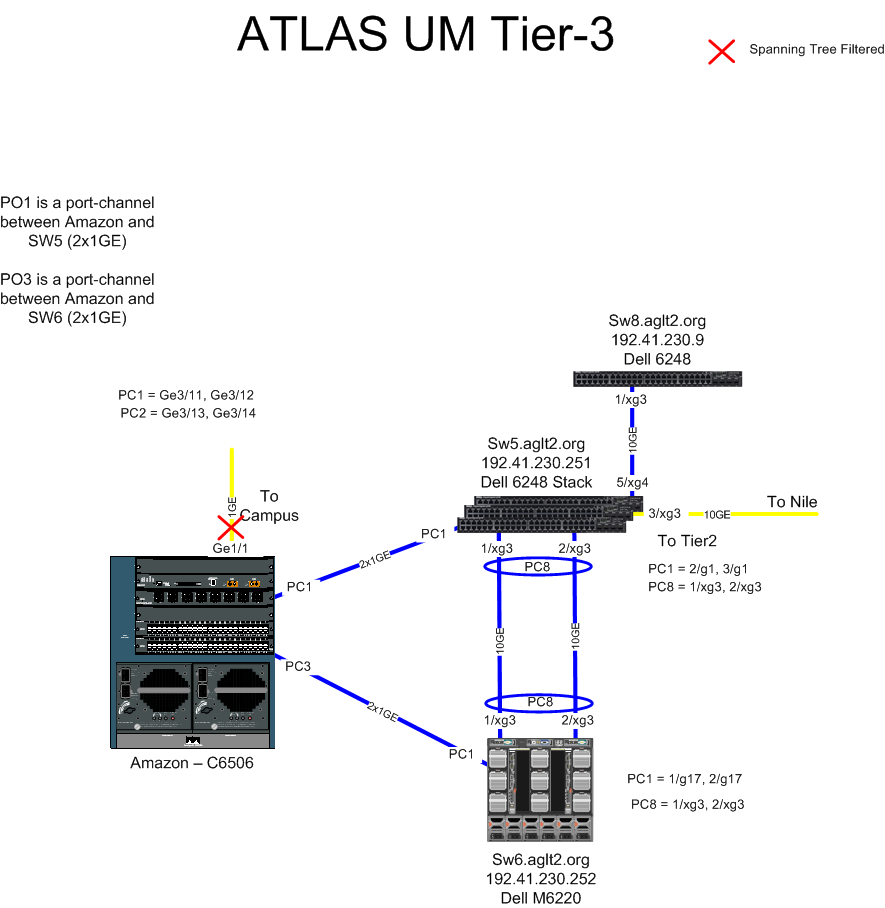

- AGLT2 RNDL Spanning Tree Base:

- AGLT2 RNDL MST Instance 0:

- AGLT2 RNDL MST Instance 1:

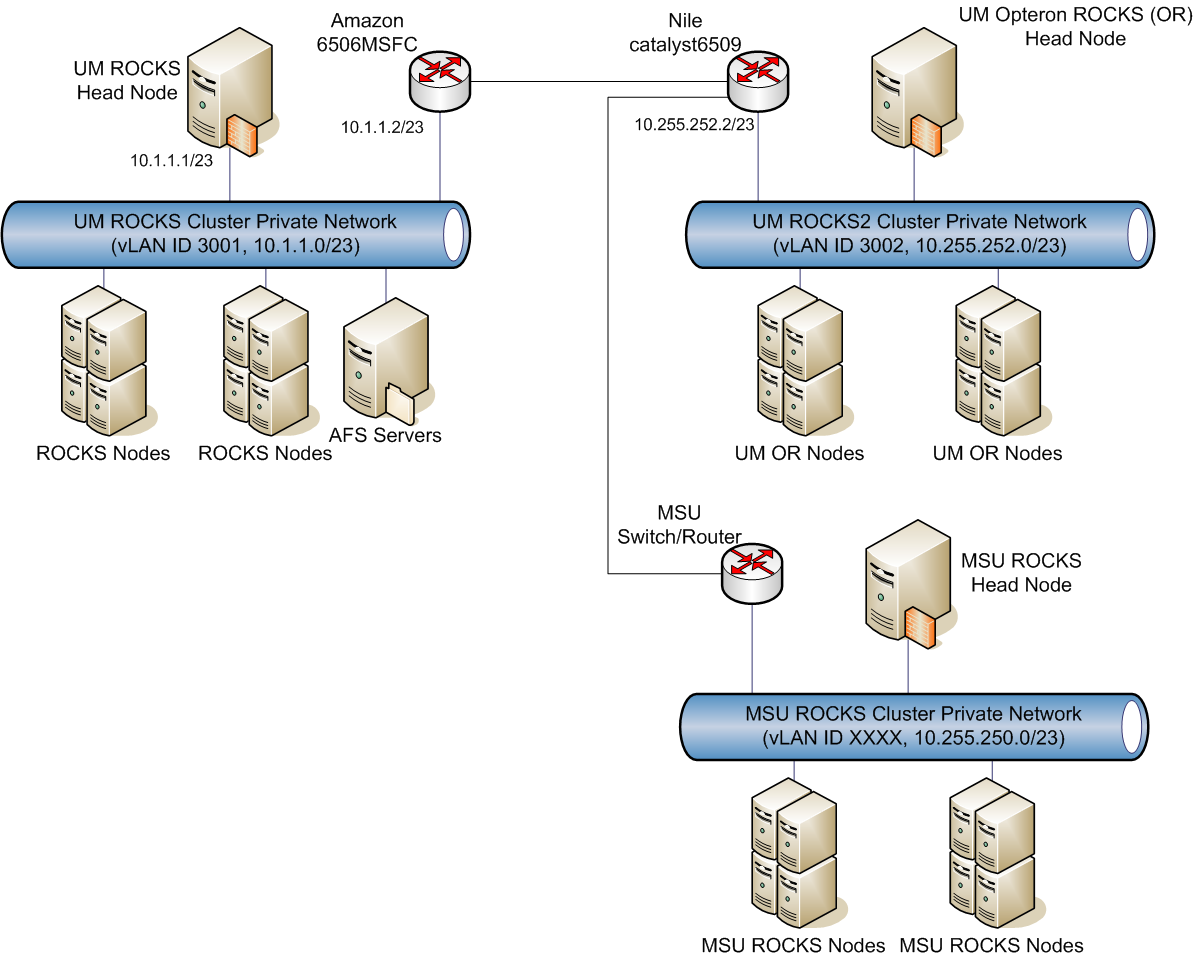
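Under IEEE 802.1s MST, switches form a single region only if they agree on the region name, the configuration revision number, and the VLAN-to-instance mapping; a switch that differs in any of these ends up in a separate region at the boundary. When reconfiguring the remaining switches for MST, a consistency check like the sketch below can catch such a mismatch before it is deployed. The `MstConfig` class, the revision number, and the VLAN-to-instance assignments are hypothetical, and the real settings would have to be collected from each switch separately.

```python
from dataclasses import dataclass, field


@dataclass
class MstConfig:
    switch: str
    region: str
    revision: int
    # VLAN id -> MST instance; VLANs not listed fall into instance 0 (the IST).
    vlan_map: dict = field(default_factory=dict)

    def identifier(self):
        """Region name, revision and normalised VLAN map, which must all match."""
        # Drop explicit instance-0 entries so they compare equal to unlisted VLANs.
        mapping = tuple(sorted((v, i) for v, i in self.vlan_map.items() if i != 0))
        return (self.region, self.revision, mapping)


def region_mismatches(configs, reference):
    """Return switches whose MST identifier differs from the reference switch."""
    ref = next(c for c in configs if c.switch == reference).identifier()
    return [c.switch for c in configs if c.identifier() != ref]


# Hypothetical VLAN-to-instance assignments for the LSA region.
configs = [
    MstConfig("Nile", "AGLT2-LSA", 1, {4010: 1, 6: 2}),
    MstConfig("Amazon", "AGLT2-LSA", 1, {6: 2, 4010: 1}),
    MstConfig("SW5", "AGLT2-LSA", 1, {4010: 1}),  # VLAN 6 mapping missing
]
print(region_mismatches(configs, "Nile"))
```

Normalising the mapping means the order in which VLANs were configured does not matter; only the resulting assignment does.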

Private networks

The clusters at each site will have "private" Ethernet networks connecting all nodes. Typically this is on the first Ethernet port of the compute nodes (eth0). Most traffic internal to the cluster will be on this network. We plan to connect these networks between the two sites, with routing established on the MiLR switches.

| Site | Network | Gateway |
|---|---|---|
| MSU | 10.10.2.0/23 | 10.10.3.2 ??? |
| U-M | 10.10.0.0/23 | 10.10.1.2 |
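Before routing the two private networks to each other, it is worth checking that each gateway lies inside its own site's network and that the two networks do not overlap. A minimal sketch using Python's standard `ipaddress` module on the values from the table; the MSU gateway is still marked "???" there, so it is treated as provisional.

```python
import ipaddress

# Site -> (network, gateway), copied from the private-network table.
# The MSU gateway is still provisional ("???" in the table).
PRIVATE = {
    "MSU": ("10.10.2.0/23", "10.10.3.2"),
    "U-M": ("10.10.0.0/23", "10.10.1.2"),
}

nets = {site: ipaddress.ip_network(net) for site, (net, _) in PRIVATE.items()}

for site, (_, gw) in PRIVATE.items():
    # Each gateway must sit inside its own site's /23.
    assert ipaddress.ip_address(gw) in nets[site], f"{site} gateway outside its network"
    usable = nets[site].num_addresses - 2  # minus network and broadcast addresses
    print(site, nets[site], "netmask", nets[site].netmask, usable, "usable hosts")

# Routed site networks must not overlap.
assert not nets["MSU"].overlaps(nets["U-M"])

# The two /23s are adjacent, so a single /22 covers the combined private space.
print(list(ipaddress.collapse_addresses(nets.values())))
# [IPv4Network('10.10.0.0/22')]
```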

Special Connections

We may also wish to bridge some protocols to allow things like PXE booting or SNMP queries to work across sites as if the two private LANs were one. What protocols or services (ports) do we want this for?

Public Networks

The primary IPs for AGLT2 are in the space 192.41.230.0/23.

| Site | Network | Gateway |
|---|---|---|
| MSU | 192.41.231.0/24 | 192.41.231.1 ??? |
| U-M | 192.41.230.0/24 | 192.41.230.1 |
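The public /23 divides cleanly into the two per-site /24s, one per site. A minimal sketch with Python's standard `ipaddress` module that confirms the split and derives the netmask each host needs; the MSU gateway is still marked "???" in the table, so it is treated as provisional.

```python
import ipaddress

AGLT2_PUBLIC = ipaddress.ip_network("192.41.230.0/23")

# Site -> (network, gateway), copied from the public-network table.
SITES = {
    "U-M": ("192.41.230.0/24", "192.41.230.1"),
    "MSU": ("192.41.231.0/24", "192.41.231.1"),  # gateway still provisional
}

# Splitting the /23 into /24s yields exactly the two per-site networks.
halves = list(AGLT2_PUBLIC.subnets(new_prefix=24))
assert halves == sorted(ipaddress.ip_network(n) for n, _ in SITES.values())

for site, (net, gw) in SITES.items():
    network = ipaddress.ip_network(net)
    assert network.subnet_of(AGLT2_PUBLIC)        # requires Python 3.7+
    assert ipaddress.ip_address(gw) in network    # gateway inside its own /24
    print(site, network, "netmask", network.netmask, "gateway", gw)
```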

- Cluster_diagram:

- Network physical diagram:

- Logical network diagram:

- AGLT2 RNDL STP Base v3:

- AGLT2 LSA MST Instance 0:

- AGLT2 LSA MST Instance 1:

- AGLT2 LSA MST Instance 2:

- AGLT2 RNDL MST Instance 0:

- AGLT2 RNDL MST Instance 1:

| Attachment | Size | Date | Who | Comment |
|---|---|---|---|---|
| AGLT2-LSA-MST-Instance-0.png | 212 K | 22 Feb 2011 - 03:50 | RoyHockett | AGLT2 LSA MST Instance 0 |
| AGLT2-LSA-MST-Instance-1.png | 207 K | 22 Feb 2011 - 03:51 | RoyHockett | AGLT2 LSA MST Instance 1 |
| AGLT2-LSA-MST-Instance-2.png | 208 K | 22 Feb 2011 - 03:51 | RoyHockett | AGLT2 LSA MST Instance 2 |
| AGLT2-LSA-STP-Base-v3.png | 192 K | 22 Feb 2011 - 03:36 | RoyHockett | AGLT2 LSA STP Base v3 |
| AGLT2-RNDL-MST-Instance-0.png | 110 K | 22 Feb 2011 - 03:52 | RoyHockett | AGLT2 RNDL MST Instance 0 |
| AGLT2-RNDL-MST-Instance-1.png | 109 K | 22 Feb 2011 - 03:52 | RoyHockett | AGLT2 RNDL MST Instance 1 |
| AGLT2-RNDL-STP-Base-v3.png | 89 K | 22 Feb 2011 - 03:49 | RoyHockett | AGLT2 RNDL STP Base v3 |
| UM-MSU-Cluster-Layout.png | 196 K | 05 Feb 2007 - 16:10 | ShawnMcKee | Cluster_diagram |
| UM-MSU-Logical.png | 234 K | 05 Feb 2007 - 16:11 | ShawnMcKee | Logical network diagram |
| UM-MSU-Physical.png | 171 K | 05 Feb 2007 - 16:11 | ShawnMcKee | Network physical diagram |
| UM-MSU-Tier2-Atlasv3.vsd | 475 K | 06 Feb 2007 - 14:57 | ShawnMcKee | Visio source for diagrams |
| UM-MSU-Tier2-Logical.png | 210 K | 06 Feb 2007 - 14:58 | ShawnMcKee | Logical network diagram |


Topic revision: r25 - 22 Feb 2011, RoyHockett

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.
