Md3260Benchmark (10 Dec 2012, BenMeekhof)

Test command example
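
The six `-F` paths in the test command differ only in their index, so the command line can be generated from the thread count. The following is a minimal POSIX shell sketch. It relies on iozone's throughput-mode convention that `-F` takes exactly one file name per `-t` thread. The function name `iozone_cmd` and its argument order are hypothetical and not part of the original test setup. The function only prints the command; it does not run it.

```sh
#!/bin/sh
# Hypothetical helper: print an iozone throughput-mode command line.
# Usage: iozone_cmd THREADS FILESIZE RECSIZE DIR REPORT
iozone_cmd() {
    threads=$1   # number of parallel workers (-t)
    fsize=$2     # per-thread file size (-s), e.g. 48G
    rsize=$3     # record size (-r), e.g. 512k
    dir=$4       # directory on the volume under test
    report=$5    # Excel-compatible report file (-R -b)

    cmd="iozone -t$threads -Rb $report -r$rsize -s$fsize -F"
    i=1
    # -F needs one file name per thread: io1.test .. ioN.test
    while [ "$i" -le "$threads" ]; do
        cmd="$cmd $dir/io$i.test"
        i=$((i + 1))
    done
    printf '%s\n' "$cmd"
}

# Print the command for the 6-thread, 48G-file DDP test.
iozone_cmd 6 48G 512k /md3260/R6_MD2_DDP2 test4-6threads-fs48G-rs512k-R6_MD2_DDP2.xls
```

With these arguments, the helper reproduces the 6-thread, 48G test command shown on this page. Changing the thread count or file size (for example, `512G`) yields the other test variants.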

```sh
iozone -t6 -Rb test4-6threads-fs48G-rs512k-R6_MD2_DDP2.xls -r512k -s48G -F /md3260/R6_MD2_DDP2/io1.test /md3260/R6_MD2_DDP2/io2.test /md3260/R6_MD2_DDP2/io3.test /md3260/R6_MD2_DDP2/io4.test /md3260/R6_MD2_DDP2/io5.test /md3260/R6_MD2_DDP2/io6.test
```

Optimization/tuning notes

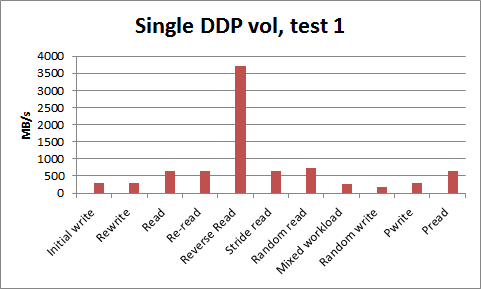

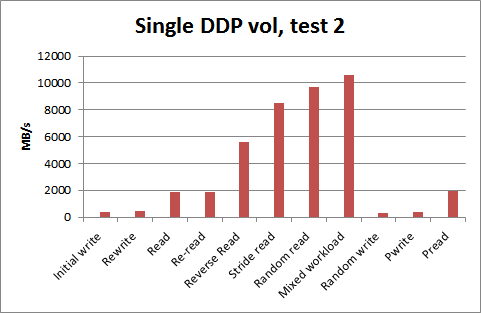

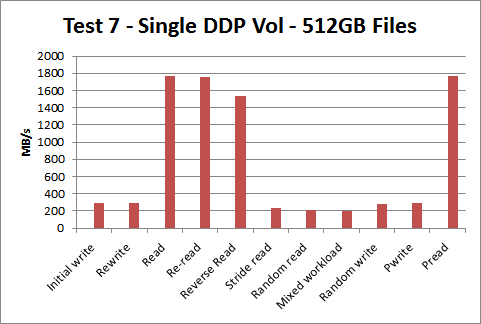
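
The XFS filesystems in these tests were not aligned to the RAID stripe. For comparison, here is a minimal sketch of how `sunit`/`swidth` values for `mkfs.xfs -d` could be derived for a plain RAID 6 volume. It assumes that "30D"/"20D" denote the total drive count of the RAID 6 group and that the segment size is 128 KiB. Neither value is stated on this page. The DDP volume uses a different internal layout, so the sketch does not apply to it. The function name `xfs_stripe_opts` is hypothetical.

```sh
#!/bin/sh
# Hypothetical helper: XFS stripe alignment for a RAID 6 group.
# ASSUMPTIONS: DRIVES is the total drive count in the group and
# SEGMENT_KIB is the per-drive segment size in KiB.
# Usage: xfs_stripe_opts DRIVES SEGMENT_KIB
xfs_stripe_opts() {
    drives=$1
    seg_kib=$2
    data=$((drives - 2))        # RAID 6: two drives' worth of parity per stripe
    sunit=$((seg_kib * 2))      # mkfs.xfs sunit is in 512-byte units
    swidth=$((sunit * data))    # full stripe = segment size x data drives
    printf 'sunit=%s,swidth=%s\n' "$sunit" "$swidth"
}

xfs_stripe_opts 30 128   # prints sunit=256,swidth=7168
xfs_stripe_opts 20 128   # prints sunit=256,swidth=4608
```

Under these assumptions, passing the first result as `mkfs.xfs -d sunit=256,swidth=7168` on the target device would align the filesystem to the 30-drive group. The byte-based form `-d su=128k,sw=28` expresses the same geometry.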

We increased the cache block size from the 4K default to 32K in the "optimized" tests (all tests except the first). The XFS filesystems were not created with sunit/swidth values matching the RAID stripes.

Single DDP Volume without optimization

Single DDP Volume (48GB and 512GB files)

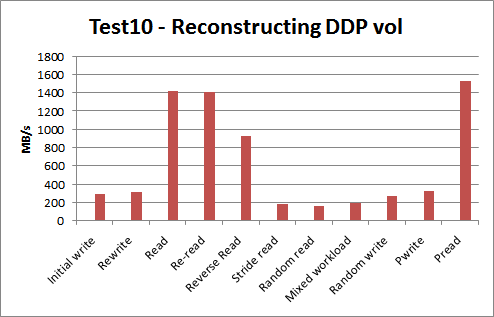

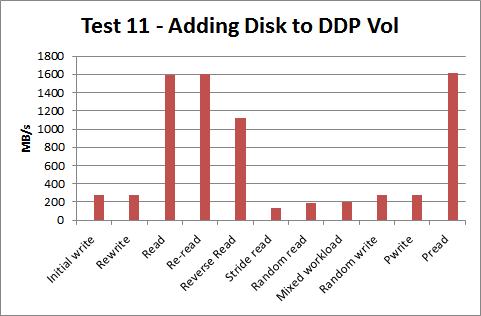

Reconstruction time (1 disk removed; the array begins reconstruction immediately on disk removal): 10:40

Rebuild time (after reconstruction, 1 disk marked online again): 10:43


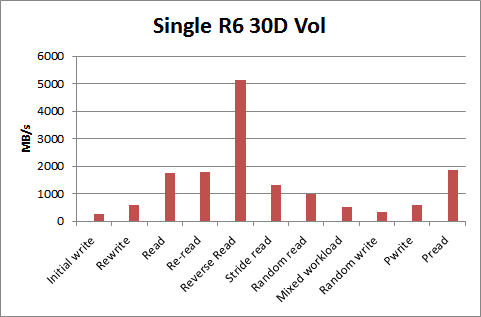

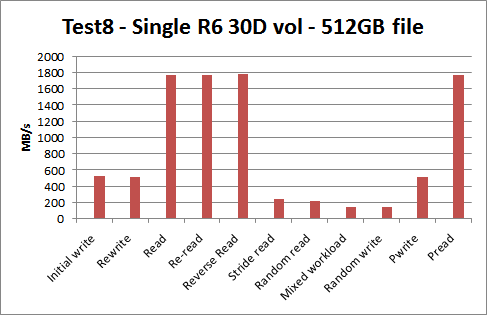

Single RAID 6 30D Volume (48GB and 512GB files)

Rebuild time (1 disk marked "fail", then "manual rebuild" triggered in the GUI): 24:59


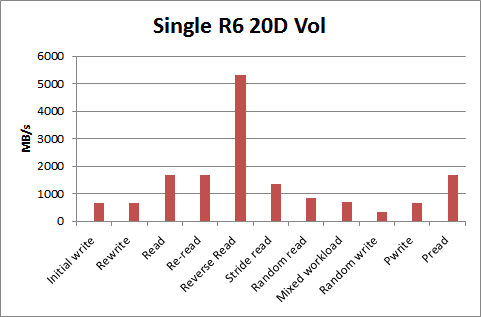

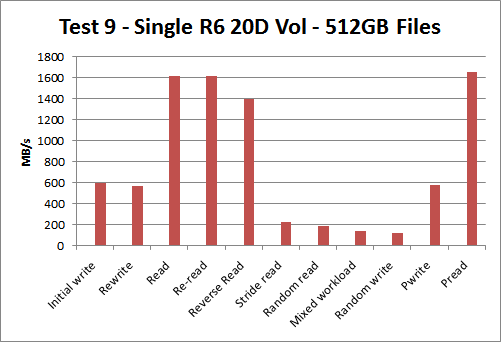

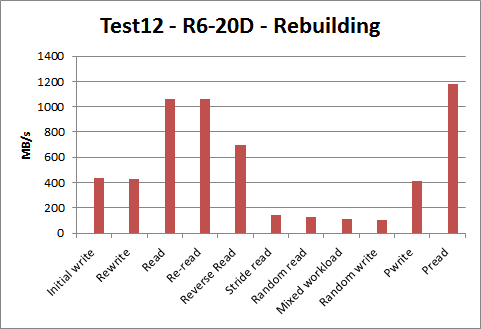

Single RAID 6 20D Volume

Rebuild time (1 disk marked "fail", then "manual rebuild" triggered in the GUI): 23:15


| Attachment | Size | Date | Who |
|---|---|---|---|
| test10-6threads-fs512G-rs512k-R6_MD2_DDP2-rebuilding.png | 12 K | 05 Dec 2012 - 18:28 | BenMeekhof |
| test11-6threads-fs512G-rs512k-R6_MD2_DDP2-diskadd.png | 13 K | 07 Dec 2012 - 22:03 | BenMeekhof |
| test12-6threads-fs512G-rs512k-R620D-rebuilding.png | 12 K | 10 Dec 2012 - 16:51 | BenMeekhof |
| test3-6threads-fs48G-rs512k-R6_MD2_DDP2.png | 11 K | 29 Nov 2012 - 17:49 | BenMeekhof |
| test4-6threads-fs48G-rs512k-R6_MD2_DDP2.png | 12 K | 29 Nov 2012 - 17:49 | BenMeekhof |
| test5-6threads-fs48G-rs512k-R6_MD1_30D.png | 12 K | 29 Nov 2012 - 17:49 | BenMeekhof |
| test6-6threads-fs48G-rs512k-R6_MD1_20D.png | 12 K | 29 Nov 2012 - 19:51 | BenMeekhof |
| test7-6threads-fs512G-rs512k-R6_MD2_DDP2.png | 14 K | 30 Nov 2012 - 21:16 | BenMeekhof |
| test8-6threads-fs512G-rs512k-R6_MD1_30D.png | 12 K | 02 Dec 2012 - 23:44 | BenMeekhof |
| test9-6threads-fs512G-rs512k-R6_MD1_20D.png | 13 K | 03 Dec 2012 - 21:45 | BenMeekhof |


Topic revision: r11 - 10 Dec 2012, BenMeekhof

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.
